PBS Job Arrays

Overview

Teaching: 25 min

Exercises: 5 min

Questions

How do I run multiple similar jobs?

Objectives

Discover PBS job arrays

Submit an array job

This episode introduces PBS Array Jobs, which can submit multiple jobs at once.

PBS Job Arrays

You’ll often want to run similar jobs many times, or the same project pipeline on multiple similar sets of data. Submitting a new PBS job for each of these runs quickly gets cumbersome, so you might try automating this process. PBS provides an easy way to do just this with its array jobs.

An array job is simply a job duplicated many times. It is organised as an array in which each copy of the job has its own array index, which is also stored in the environment variable PBS_ARRAY_INDEX. Array jobs are created using the PBS directive -J followed by a range of array indices, e.g.:

#PBS -J 1-100

#PBS -J 4-25:3

The first example directive above will launch 100 copies of the job defined in the PBS script, with each copy having an array index equal to an integer from 1 to 100. The second example will launch 8 copies of the job defined in the script, with each copy having an array index equal to an integer from 4 to 25 in steps of 3.

In general, the argument to the -J directive is i-f[:s], where i and f are non-negative integers with i < f, and s is a positive step. This creates rising indices starting from the initial value i, up to at most the final value f, in steps of s. The step argument is optional and defaults to 1.
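To see how a -J argument expands before you submit anything, the rule above can be reproduced in a few lines of Bash. The function expand_j_range below is a hypothetical helper written for this sketch, not a PBS command; it assumes a well-formed i-f[:s] argument and does no error checking.

```shell
#!/bin/bash
# Hypothetical helper (not part of PBS): list the array indices described by
# a "-J i-f[:s]" argument, one per line. Assumes well-formed input.
expand_j_range() {
    local range="$1"
    local start_end="${range%%:*}"   # "i-f" (everything before an optional ":")
    local step=1                     # the step s defaults to 1
    if [[ "$range" == *:* ]]; then
        step="${range#*:}"           # "s" (everything after the ":")
    fi
    local first="${start_end%-*}"    # "i"
    local last="${start_end#*-}"     # "f"
    seq "$first" "$step" "$last"
}

expand_j_range "4-25:3" | paste -sd' ' -   # 4 7 10 13 16 19 22 25
expand_j_range "1-100" | wc -l             # 100 indices
```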

A quick (and pointless) example of an array job is the following script. Use nano to create a new file called hello_array.sh containing these two lines:

#!/bin/bash

echo "Hello, world. I am job $PBS_ARRAY_INDEX"

Now submit the script to the queue via:

qsub -P Training -J 1-3 hello_array.sh

This submits an array of 3 jobs, each of which echoes the string “Hello, world. I am job N”, where N is its array index (1, 2 or 3).
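Echoing the index is pointless on its own, but the same variable is what lets each copy of a job work on a different data set. One common pattern, sketched below and not part of the course files, keeps one input name per line in a list file and has each subjob read the line whose number matches its index. The list file and sample names are hypothetical, and the script falls back to index 2 when PBS_ARRAY_INDEX is unset so it can be tried outside the scheduler.

```shell
#!/bin/bash
# Hypothetical input list: one sample name per line (names are made up).
scratch=$(mktemp -d)
printf '%s\n' sampleA sampleB sampleC > "$scratch/samples.txt"

# PBS sets PBS_ARRAY_INDEX inside a real subjob; default to 2 here so the
# sketch also runs outside PBS.
PBS_ARRAY_INDEX=${PBS_ARRAY_INDEX:-2}

# Print line number $PBS_ARRAY_INDEX of the list. With "-J 1-3", subjob 1
# gets sampleA, subjob 2 gets sampleB and subjob 3 gets sampleC.
sample=$(sed -n "${PBS_ARRAY_INDEX}p" "$scratch/samples.txt")
echo "Subjob $PBS_ARRAY_INDEX will process $sample"
```

Because the lines of the list are numbered from 1, this pairs naturally with a range such as -J 1-3; with a range starting at 0 you would need to add 1 to the index first.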

Job arrays are marked in the job queue with a ‘B’ (for ‘begun’) rather than an ‘R’ once their subjobs start running. They also have slightly different job IDs from single jobs: their ID numbers are followed by a pair of square brackets, []. For example, an array job might have the job ID 1578913[], whereas a single job would have the job ID 1578913. This is important: the [] must be included when passing the job ID to a command such as qstat.

Submitting an array job

Let’s now practise submitting an actual array job. Move into the data directory we extracted earlier, and then into the Demo-a folder:

cd Automation/Demo-a

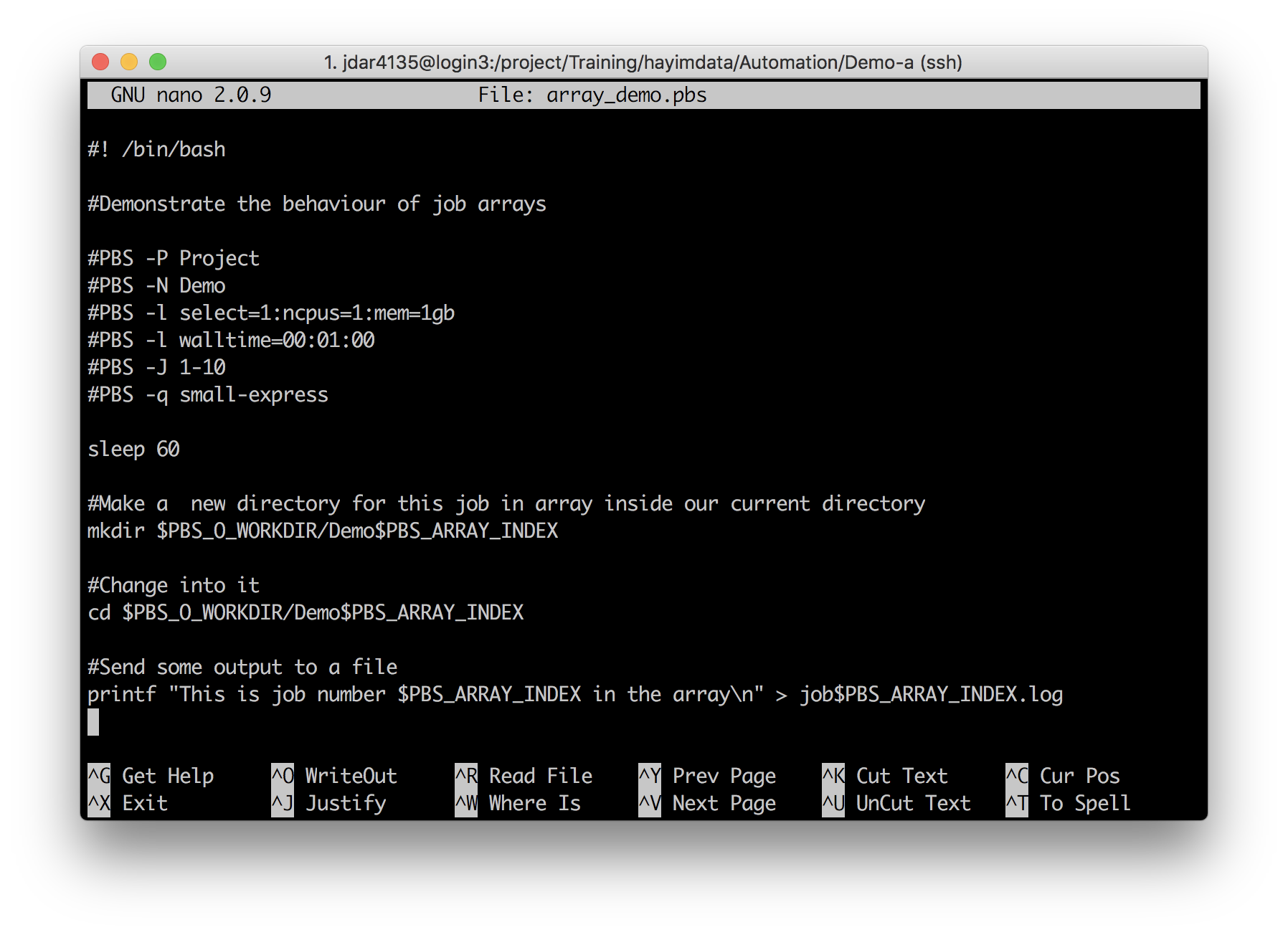

Open the script array_demo.pbs in nano or your preferred editor:

nano array_demo.pbs

Make any edits necessary to run this script, and then submit it via qsub.

Change #1

Specify your project.

Use the -P PBS directive to specify the Training project, using its short name:

#PBS -P Training

Change #2

Give your job a name

Use the -N PBS directive to give your job an easily identifiable name. You might run lots of jobs at the same time, so you want to be able to keep track of them!

#PBS -N DemoHayA

Substitute a job name of your choice!

Change #3

Tailor your resource requests.

Use the -l PBS directive to request appropriate compute resources and walltime for your job. This script isn’t doing much! Leave the requests as they are, with 1 minute of walltime, 1 GB of RAM (the minimum on Artemis) and 1 CPU:

#PBS -l select=1:ncpus=1:mem=1GB
#PBS -l walltime=00:01:00

Change #4

Choose a queue.

If you are using the Training Scheduler[1], you do not have access to all the queues; you can submit jobs to defaultQ and dtq only.

In the normal Artemis environment you can submit to defaultQ, dtq, small-express, scavenger, and possibly some strategic allocation queues you may have access to.

#PBS -q defaultQ

Change #5 (optional)

Set up email notifications for your job.

Use the -M and -m PBS directives to specify a destination email address and the events you wish to be notified about. You can receive notifications for when your job (b)egins, (e)nds or (a)borts.

#PBS -M hayim.dar@sydney.edu.au
#PBS -m abe

What will this job do? First, note the array job directive, which creates 10 copies of this job with indices 1, 2, …, 10:

#PBS -J 1-10

Secondly, can you see how each copy of this job will execute differently? How is this achieved?

Answer

With the PBS_ARRAY_INDEX variable. Commands in the array_demo.pbs script refer to the job’s array index to differentiate between the copies:

mkdir $PBS_O_WORKDIR/Demo$PBS_ARRAY_INDEX
cd $PBS_O_WORKDIR/Demo$PBS_ARRAY_INDEX
printf "This is job number $PBS_ARRAY_INDEX in the array\n" > job$PBS_ARRAY_INDEX.log
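Putting Changes #1 to #4 together with the -J directive and the commands above, the finished script might look roughly like the one written out below (the optional email directives are left out, and the course copy of array_demo.pbs may differ). Because Bash treats every #PBS line as a comment, the commands can be sanity-checked without the scheduler by setting PBS_O_WORKDIR and PBS_ARRAY_INDEX by hand. This sketch does that for two indices in a throwaway directory from mktemp; it only imitates what PBS does for each subjob.

```shell
#!/bin/bash
# Write an assembled version of array_demo.pbs into a scratch directory.
scratch=$(mktemp -d)
cat > "$scratch/array_demo.pbs" <<'EOF'
#!/bin/bash
#PBS -P Training
#PBS -N DemoHayA
#PBS -l select=1:ncpus=1:mem=1GB
#PBS -l walltime=00:01:00
#PBS -q defaultQ
#PBS -J 1-10

mkdir "$PBS_O_WORKDIR/Demo$PBS_ARRAY_INDEX"
cd "$PBS_O_WORKDIR/Demo$PBS_ARRAY_INDEX"
printf "This is job number $PBS_ARRAY_INDEX in the array\n" > "job$PBS_ARRAY_INDEX.log"
EOF

# Imitate subjobs 1 and 2 locally: Bash ignores the #PBS lines as comments,
# so we only need to supply the variables PBS would normally set.
for i in 1 2; do
    PBS_O_WORKDIR="$scratch" PBS_ARRAY_INDEX=$i bash "$scratch/array_demo.pbs"
done
cat "$scratch"/Demo*/*.log
```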

Submit the job and then take a look at your job status:

qstat -u $USER

[jdar4135@login3 Demo-a]$ qstat -u $USER

pbsserver:
                                                            Req'd  Req'd   Elap
Job ID          Username Queue    Jobname    SessID NDS TSK Memory Time  S Time
--------------- -------- -------- ---------- ------ --- --- ------ ----- - -----
2596901[].pbsse jdar4135 small-ex DemoHayA       --   1   1    1gb 00:00 Q   --

What do you notice? What would be the output of the command below? (Use your own job ID!)

qstat 2596901

[jdar4135@login3 Demo-a]$ qstat 2596901
qstat: Unknown Job Id 2596901.pbsserver

qstat and the -t flag for array jobs

What went wrong? We left off the [].

qstat 2596901[]

To query all the subjobs in an array, use the -t flag: qstat -t jobid[].
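When you script these queries, it helps to keep the job ID that qsub prints and to quote it, since square brackets are also glob characters in Bash. The small function below is a hypothetical helper, not part of PBS: it rewrites an array job ID into the ID of a single subjob. The qsub and qstat lines are left as comments because they need the cluster, and the ID printed by qsub is assumed to look like the ones shown in this episode.

```shell
#!/bin/bash
# Hypothetical helper (not a PBS command): turn an array job ID such as
# "2596901[].pbsserver" into the ID of one subjob, e.g. "2596901[4].pbsserver".
subjob_id() {
    local array_id="$1" index="$2"
    local base="${array_id%%\[*}"     # "2596901"
    local suffix="${array_id#*\]}"    # ".pbsserver" (empty if there is no server part)
    printf '%s[%s]%s\n' "$base" "$index" "$suffix"
}

subjob_id '2596901[].pbsserver' 4    # 2596901[4].pbsserver

# On the cluster (not run here), keep the ID that qsub prints and quote it:
#   jobid=$(qsub array_demo.pbs)
#   qstat -t "$jobid"
#   qstat -t "$(subjob_id "$jobid" 4)"
```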

[jdar4135@login3 Demo-a]$ qstat -t 2596901[]

Job id            Name             User             Time Use S Queue
----------------- ---------------- ---------------- -------- - -----
2596901[].pbsserv DemoHayA         jdar4135                0 B small-express
2596901[1].pbsser DemoHayA         jdar4135                0 X small-express
2596901[2].pbsser DemoHayA         jdar4135                0 X small-express
2596901[3].pbsser DemoHayA         jdar4135                0 X small-express
2596901[4].pbsser DemoHayA         jdar4135                0 X small-express
2596901[5].pbsser DemoHayA         jdar4135                0 X small-express
2596901[6].pbsser DemoHayA         jdar4135                0 X small-express
2596901[7].pbsser DemoHayA         jdar4135                0 X small-express
2596901[8].pbsser DemoHayA         jdar4135                0 X small-express
2596901[9].pbsser DemoHayA         jdar4135         00:00:00 R small-express
2596901[10].pbsse DemoHayA         jdar4135         00:00:00 R small-express

Note that for subjobs of an array, completion is indicated not by ‘F’ but by ‘X’, meaning ‘expired’. You can query individual subjobs by their specific index within the array:

qstat -t 2596901[4]

[jdar4135@login3 Demo-a]$ qstat -t 2596901[4]

Job id            Name             User             Time Use S Queue
----------------- ---------------- ---------------- -------- - -----
2596901[4].pbsser DemoHayA         jdar4135                0 X small-express
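For a large array, tallying the S column by eye gets tedious. One option, sketched below, is to let awk count the subjobs in each state. The sample lines are copied from the qstat -t output above; on the cluster you would pipe qstat -t "2596901[]" into count_states instead. count_states is a hypothetical helper, and it assumes the column layout shown above, where the state is the fifth whitespace-separated field; the parent line is skipped because only IDs with a number inside the brackets are counted.

```shell
#!/bin/bash
# Sample `qstat -t` lines copied from the DemoHayA output above.
qstat_output='2596901[].pbsserv DemoHayA jdar4135 0 B small-express
2596901[1].pbsser DemoHayA jdar4135 0 X small-express
2596901[2].pbsser DemoHayA jdar4135 0 X small-express
2596901[3].pbsser DemoHayA jdar4135 0 X small-express
2596901[4].pbsser DemoHayA jdar4135 0 X small-express
2596901[5].pbsser DemoHayA jdar4135 0 X small-express
2596901[6].pbsser DemoHayA jdar4135 0 X small-express
2596901[7].pbsser DemoHayA jdar4135 0 X small-express
2596901[8].pbsser DemoHayA jdar4135 0 X small-express
2596901[9].pbsser DemoHayA jdar4135 00:00:00 R small-express
2596901[10].pbsse DemoHayA jdar4135 00:00:00 R small-express'

# Hypothetical helper: count subjobs (IDs with digits inside the brackets)
# by state, taken from field 5, and print "STATE COUNT" lines in sorted order.
count_states() {
    awk '$1 ~ /\[[0-9]+]/ { count[$5]++ } END { for (s in count) print s, count[s] }' | sort
}

printf '%s\n' "$qstat_output" | count_states
# On Artemis: qstat -t "2596901[]" | count_states
```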

As usual, once your array job has completed, have a look to see that it ran as expected:

- Check for errors:

cat DemoHayA.e*

- Check for Exit Status of 0:

grep -se "Exit Status" *

- Check for log files:

[jdar4135@login1 Demo-a]$ ls
array_demo.pbs  DemoHayA.e2596901.1   DemoHayA.o2596901.10        DemoHayA.o2596901.6
Demo1           DemoHayA.e2596901.10  DemoHayA.o2596901.10_usage  DemoHayA.o2596901.6_usage
Demo10          DemoHayA.e2596901.2   DemoHayA.o2596901.1_usage   DemoHayA.o2596901.7
Demo2           DemoHayA.e2596901.3   DemoHayA.o2596901.2         DemoHayA.o2596901.7_usage
Demo3           DemoHayA.e2596901.4   DemoHayA.o2596901.2_usage   DemoHayA.o2596901.8
Demo4           DemoHayA.e2596901.5   DemoHayA.o2596901.3         DemoHayA.o2596901.8_usage
Demo5           DemoHayA.e2596901.6   DemoHayA.o2596901.3_usage   DemoHayA.o2596901.9
Demo6           DemoHayA.e2596901.7   DemoHayA.o2596901.4         DemoHayA.o2596901.9_usage
Demo7           DemoHayA.e2596901.8   DemoHayA.o2596901.4_usage
Demo8           DemoHayA.e2596901.9   DemoHayA.o2596901.5
Demo9           DemoHayA.o2596901.1   DemoHayA.o2596901.5_usage

Note how each subjob has its own set of log files, indicated by the .N suffix corresponding to its array index.

- Check to see if expected files were created:

ls Demo*/*.log

cat Demo*/*.log

[jdar4135@login1 Demo-a]$ ls Demo*/*.log
Demo10/job10.log Demo1/job1.log Demo2/job2.log Demo3/job3.log Demo4/job4.log Demo5/job5.log Demo6/job6.log Demo7/job7.log Demo8/job8.log Demo9/job9.log

[jdar4135@login1 Demo-a]$ cat Demo*/*.log
This is job number 10 in the array
This is job number 1 in the array
This is job number 2 in the array
This is job number 3 in the array
This is job number 4 in the array
This is job number 5 in the array
This is job number 6 in the array
This is job number 7 in the array
This is job number 8 in the array
This is job number 9 in the array
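With many subjobs, reading the grep output line by line gets slow. The function below is a sketch that flags any subjob whose job summary does not report an exit status of 0. It assumes the summaries are in the *_usage files from the listing above and contain an “Exit Status:” line with the number as its last field, as in the job summary shown later in this episode; check_exit_status is a hypothetical name, not an Artemis tool.

```shell
#!/bin/bash
# Hypothetical helper: print every file whose "Exit Status" line is missing or
# non-zero, and return 1 if any were found. Assumes a summary line such as
# "Exit Status: 0", with the status as the last field on that line.
check_exit_status() {
    local f status failed=0
    for f in "$@"; do
        status=$(awk '/Exit Status/ { print $NF; exit }' "$f")
        if [[ "$status" != "0" ]]; then
            echo "$f: Exit Status ${status:-missing}"
            failed=1
        fi
    done
    return "$failed"
}

# Usage in Demo-a (not run here):
#   check_exit_status DemoHayA.o*_usage && echo "All subjobs exited with status 0"
```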

Array job log redirection!

We’re going to try that again, but with a small modification. The outputs from our first array job were all redirected to different files using the > operator:

printf "This is job number $PBS_ARRAY_INDEX in the array\n" > job$PBS_ARRAY_INDEX.log

This meant that the standard output log files generated by the PBS scheduler were empty, since the output of printf was sent elsewhere. This time, we will customise the PBS log files themselves using the subjob array indices.

Head to the Demo-b folder and open the array_demo.pbs script:

cd ../Demo-b

nano array_demo.pbs

Make any edits necessary to run this script, and then submit it via qsub.

Change #1

Specify your project.

Use the -P PBS directive to specify the Training project, using its short name:

#PBS -P Training

Change #2

Give your job a name

Use the -N PBS directive to give your job an easily identifiable name. You might run lots of jobs at the same time, so you want to be able to keep track of them!

#PBS -N DemoHayB

Substitute a job name of your choice!

Change #3

Tailor your resource requests.

Use the -l PBS directive to request appropriate compute resources and walltime for your job. This script isn’t doing much! Leave the requests as they are, with 1 minute of walltime, 1 GB of RAM (the minimum on Artemis) and 1 CPU:

#PBS -l select=1:ncpus=1:mem=1GB
#PBS -l walltime=00:01:00

Change #4

Choose a queue.

If you are using the Training Scheduler[1], you do not have access to all the queues; you can submit jobs to defaultQ and dtq only.

In the normal Artemis environment you can submit to defaultQ, dtq, small-express, scavenger, and possibly some strategic allocation queues you may have access to.

#PBS -q defaultQ

Change #5 (optional)

Set up email notifications for your job.

Use the -M and -m PBS directives to specify a destination email address and the events you wish to be notified about. You can receive notifications for when your job (b)egins, (e)nds or (a)borts.

#PBS -M hayim.dar@sydney.edu.au
#PBS -m abe

What has changed compared to the last job we ran?

Answer

The array index has been used to name the PBS job logs (via the ^array_index^ construction in the -o and -e directives), and the job output is now printed to the standard output log file, instead of being redirected to a new file as in the previous example:

#PBS -o Demo^array_index^/stdout
#PBS -e Demo^array_index^/stderr
printf "This is job number $PBS_ARRAY_INDEX in the array\n"

Submit the job and then monitor it with qstat -t:

qstat -t 2597100[]

[jdar4135@login1 Demo-b]$ qstat -t 2597100[]

Job id            Name             User             Time Use S Queue
----------------- ---------------- ---------------- -------- - -----
2597100[].pbsserv DemoHayB         jdar4135                0 B small-express
2597100[1].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[2].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[3].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[4].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[5].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[6].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[7].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[8].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[9].pbsser DemoHayB         jdar4135         00:00:00 R small-express
2597100[10].pbsse DemoHayB         jdar4135         00:00:00 R small-express

Practice checking an individual subjob:

[jdar4135@login1 Demo-b]$ qstat -t 2597100[7]

Job id            Name             User             Time Use S Queue
----------------- ---------------- ---------------- -------- - -----
2597100[7].pbsser DemoHayB         jdar4135         00:00:00 R small-express

The array_state_count

Information about subjobs can also be found in the job’s ‘full display’ -f status report:

qstat -f 2597100[] | grep array

[jdar4135@login1 Demo-b]$ qstat -f 2597100[] | grep array
    Submit_arguments = array_demo.pbs
    array = True
    array_state_count = Queued:0 Running:10 Exiting:0 Expired:0
    array_indices_submitted = 1-10

The array_state_count entry is an especially useful way to see how many of your subjobs are in which state.

Once your jobs are done, check for errors – but wait, there are no log files!

[jdar4135@login1 Demo-b]$ ls
array_demo.pbs Demo1 Demo10 Demo2 Demo3 Demo4 Demo5 Demo6 Demo7 Demo8 Demo9

But there are three files in each of the new folders created:

[jdar4135@login1 Demo-b]$ ls Demo2
stderr stdout stdout_usage

And they are in fact the PBS log files, just named and saved differently.

cat Demo2/*

[jdar4135@login1 Demo-b]$ cat Demo2/*
This is job number 2 in the array
-- Job Summary -------------------------------------------------------
Job Id: 2597100[2].pbsserver for user jdar4135 in queue small-express
Job Name: DemoHayB
Project: RDS-CORE-Training-RW
Exit Status: 0
Job run as chunks (hpc056:ncpus=1:mem=1048576kb)
Array id: 2597100[].pbsserver array index: 2
Walltime requested: 00:00:20 : Walltime used: 00:00:03
                             : walltime percent: 15.0%
-- Nodes Summary -----------------------------------------------------
-- node hpc056 summary
Cpus requested: 1 : Cpus Used: unknown
Cpu Time: unknown : Cpu percent: unknown
Mem requested: 1.0GB : Mem used: unknown
                     : Mem percent: unknown
-- WARNINGS ----------------------------------------------------------
** Low Walltime utilisation. While this may be normal, it may help to check the
** following:
** Did the job parameters specify more walltime than necessary? Requesting
** lower walltime could help your job to start sooner.
** Did your analysis complete as expected or did it crash before completing?
** Did the application run more quickly than it should have? Is this analysis
** the one you intended to run?
**
-- End of Job Summary ------------------------------------------------

Or to see all the outputs:

cat Demo*/stdout

[jdar4135@login1 Demo-b]$ cat Demo*/stdout
This is job number 10 in the array
This is job number 1 in the array
This is job number 2 in the array
This is job number 3 in the array
This is job number 4 in the array
This is job number 5 in the array
This is job number 6 in the array
This is job number 7 in the array
This is job number 8 in the array
This is job number 9 in the array
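In the output above, Demo10 comes first because the shell sorts the names matched by Demo* as text, not as numbers. If you want the outputs in array-index order, one option is to loop over the indices instead of relying on the glob. The sketch below assumes the DemoN/stdout layout and the 1-10 range used in this example; print_in_order is a hypothetical helper, and the sketch builds a throwaway copy of the folders so it can run outside Artemis.

```shell
#!/bin/bash
# Throwaway copy of the Demo-b layout (DemoN/stdout for N = 1..10) so that
# this sketch runs anywhere.
scratch=$(mktemp -d)
for i in $(seq 1 10); do
    mkdir "$scratch/Demo$i"
    printf 'This is job number %s in the array\n' "$i" > "$scratch/Demo$i/stdout"
done

# Hypothetical helper: print DIR/DemoN/stdout for N = FIRST..LAST in numeric order.
print_in_order() {
    local dir="$1" first="$2" last="$3" i
    for i in $(seq "$first" "$last"); do
        cat "$dir/Demo$i/stdout"
    done
}

print_in_order "$scratch" 1 10
# In the real Demo-b folder: print_in_order . 1 10
```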

The PBS directives -o and -e in our array_demo.pbs script told the scheduler how to name our standard output and standard error logs:

#PBS -o Demo^array_index^/stdout

#PBS -e Demo^array_index^/stderr

In our case, we saved log files called stdout and stderr in folders called DemoN, where N is the array index of the subjob. We didn’t use the Bash variable syntax $PBS_ARRAY_INDEX because these lines are PBS directives, not Bash commands: Bash treats them as comments, and PBS reads them when the job is submitted rather than expanding shell variables in them. Instead, PBS has a special syntax of its own, ^array_index^, for referring to the index of each array subjob inside a directive.
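To make the difference concrete, the snippet below imitates in plain Bash the text substitution that PBS performs on ^array_index^, using the -o and -e templates from this example. It only illustrates the resulting paths for the first three subjobs; PBS makes the substitution itself when it creates each subjob, and the Bash replacement syntax used here says nothing about how PBS implements it.

```shell
#!/bin/bash
# Templates copied from the -o and -e directives in Demo-b's array_demo.pbs.
out_template='Demo^array_index^/stdout'
err_template='Demo^array_index^/stderr'

# Illustration only: replace ^array_index^ with each subjob's index, as PBS does.
for i in 1 2 3; do
    echo "${out_template//^array_index^/$i} ${err_template//^array_index^/$i}"
done
# Demo1/stdout Demo1/stderr
# Demo2/stdout Demo2/stderr
# Demo3/stdout Demo3/stderr
```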

Notes

[1] As you should recall from the Introduction to Artemis HPC course, the scheduler is the software that runs the cluster, allocating jobs to physical compute resources. Artemis HPC provides us with a separate ‘mini-cluster’ for Training, which has a separate PBS scheduler instance and dedicated resources.

Key Points

Arrays allow you to submit multiple similar jobs in one script

Submit an array job with the option -J i-f[:s]

Monitor the queue with qstat -t jobid[]

The ^array_index^ construction can be used inside PBS directives

The $PBS_ARRAY_INDEX variable is used elsewhere in your PBS script