Efficient Python methods

Questions

- “Which method for acceleration should I choose?”

- “How do I utilise traditional Python approaches to multiple CPUs and nodes?”

Objectives

- “Learn simple methods to profile your code”

- “See how numpy and pandas use vectorising to improve performance for some data”

- “Use MPI to communicate between workers”

- “Discover Python multiprocessing and MPI execution”

In this session we will show you a few of the basic tools that we can use in Python to make our code go faster. There is no perfect method for optimising code. Efficiency gains depend on what your end goal is, what libraries are available, what method or approach you want to take when writing algorithms, what your data is like, what hardware you have. Hopefully these notes will allow you to think about your problems from different perspectives to give you the best opportunity to make your development and execution as efficient as possible.

Acceleration, Parallelisation, Vectorising, Threading, make-Python-go-fast

We will cover a few of the ways that you can potentially speed up Python. As we will learn there are multitudes of methods to make Python code more efficient, and also different implementations of libraries, tools, techniques that can all be utilised depending on how your code and/or data is organised. This is a rich and evolving ecosystem and there is no one perfect way to implement efficiencies.

Some key words that might come up:

- Vectorisation

- MPI (Message Passing Interface)

- CPU, core, node, thread, process, worker, job, task

- Parallelisation

- Python decorators and functional programming.

What does parallel mean?

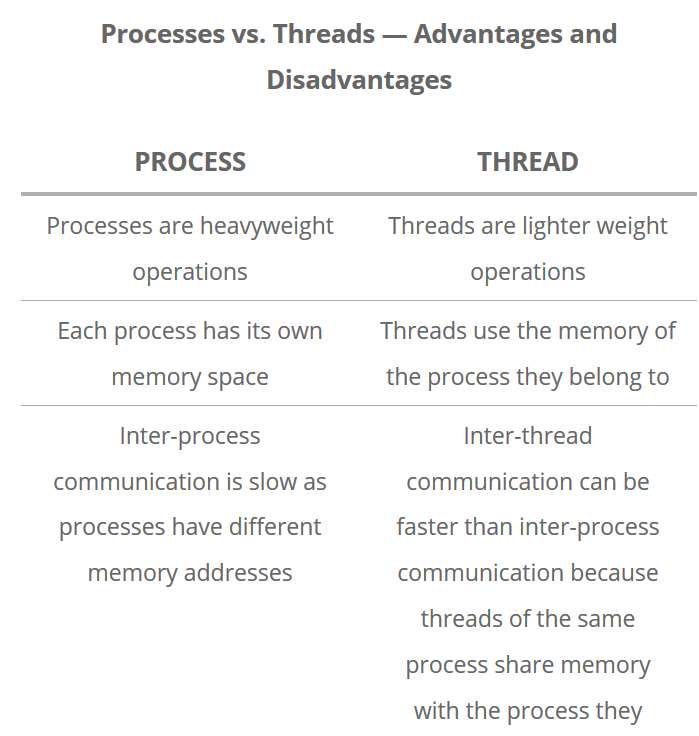

Parallel computing means separate workers or processes acting in an independent or semi-dependent manner. In a distributed set-up, each independent process is shipped its data, program files and libraries and performs its computation in an isolated environment; workers can still communicate with each other by passing messages. In contrast, there are also shared-memory set-ups where multiple computational resources are pooled together to work on the same data.

Generally speaking, parallel workflows fit different categories of data handling which can make you think about how to write your code and what approaches to take.

Embarrassingly parallel:

Requires no communication between processors. Utilise shared memory spaces. For example:

- Running the same algorithm for a range of input parameters.

- Rendering video frames in computer animation.

- OpenMP implementations.

Coarse/Fine-grained parallel:

Requires occasional or frequent communication between processors. Coarse-grained parallelism uses a small number of large tasks on large data, with only occasional communication. Fine-grained parallelism uses a large number of small tasks that communicate frequently, but it can still improve computationally bound problems. For example:

- Finite difference time-stepping on parallel grid.

- Finite element methods.

- Parallel tempering and MCMC.

- MPI implementations.

Traditional implementations of parallelism are done on a low level. However, open source software has evolved dramatically over the last few years allowing more high level implementations and concise ‘pythonic’ syntax that wraps around low level tools.

Profiling your code

Before you get stuck into making things fast, it is important to find out exactly what is slow in your code. Is a particular function running slowly? Or are you calling a really fast function a million times? You can save yourself a lot of development time by profiling your code to give you an idea of where efficiencies can be found. Try out Jupyter’s %%time magic function.

%%time
import time
time.sleep(1)

Wall time: 1.01 s

A neat little feature to check how fast some of your cells are running. Now let’s profile a simple Python script and then think about how we could make it faster. Put this code in a script (save it as faster.py):

#A test function to see how you can profile code for speedups
import time

def waithere():
    print("waiting for 1 second")
    time.sleep(1)

def add2(a=0,b=0):
    print("adding", a, "and", b)
    return(a+b)

def main():
    print("Hello, try timing some parts of this code!")
    waithere()
    add2(4,7)
    add2(3,1)

if __name__=='__main__':
    main()

Hello, try timing some parts of this code!
waiting for 1 second
adding 4 and 7
adding 3 and 1

There are several ways to debug and profile Python. A very elegant, built-in one is cProfile, which analyses your code as it executes. Run it with python -m cProfile faster.py and see the output of the script and the profiling:

Hello, try timing some parts of this code!
waiting for 1 second
adding 4 and 7
adding 3 and 1
         12 function calls in 1.008 seconds

   Ordered by: standard name

   ncalls  tottime  percall  cumtime  percall filename:lineno(function)
        1    0.000    0.000    1.008    1.008 faster.py:12(main)
        1    0.000    0.000    1.008    1.008 faster.py:2(<module>)
        1    0.000    0.000    1.002    1.002 faster.py:4(waithere)
        2    0.000    0.000    0.005    0.003 faster.py:8(add2)
        1    0.000    0.000    1.008    1.008 {built-in method builtins.exec}
        4    0.007    0.002    0.007    0.002 {built-in method builtins.print}
        1    1.001    1.001    1.001    1.001 {built-in method time.sleep}
        1    0.000    0.000    0.000    0.000 {method 'disable' of '_lsprof.Profiler' objects}

You can now interrogate your code and see where you should devote your time to improving it.
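The same profiler can also be driven from inside a script or notebook. The sketch below is one possible way to do that with the standard-library cProfile and pstats modules, sorting the report by cumulative time so the slowest call chains appear first. The functions slow_step and fast_step are hypothetical stand-ins for waithere and add2, not part of the lesson's script.

```python
import cProfile
import io
import pstats
import time

def slow_step():
    """Hypothetical stand-in for a slow function such as waithere()."""
    time.sleep(0.1)

def fast_step(a, b):
    """Hypothetical stand-in for a cheap function such as add2()."""
    return a + b

def main():
    slow_step()
    return [fast_step(i, i) for i in range(1000)]

# Profile only the call to main(), not the interpreter start-up.
profiler = cProfile.Profile()
profiler.enable()
main()
profiler.disable()

# Write the report to a string, sorted by cumulative time, top 5 rows only.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(5)
report = buffer.getvalue()
print(report)
```

Sorting by cumulative time is a design choice: it highlights functions that are slow because of everything they call, whereas sorting by "tottime" highlights the functions where the time is actually spent.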

Special note on style: Developing python software in a modular manner assists with debugging and time profiling. This is a bit different to the sequential notebooks we have been creating. But re-writing certain snippets in self-contained functions can be a fun task.
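Once code is split into self-contained functions, each one can be timed individually. As a rough sketch (not something the lesson itself provides), a small decorator built on time.perf_counter can report the run time of any function it wraps. The name timed is an assumption chosen for illustration; add2 and waithere mirror the functions in faster.py.

```python
import functools
import time

def timed(func):
    """Decorator that prints how long each call to func takes."""
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        tic = time.perf_counter()
        result = func(*args, **kwargs)
        toc = time.perf_counter()
        print(f"{func.__name__} took {toc - tic:.4f} s")
        return result
    return wrapper

@timed
def add2(a=0, b=0):
    return a + b

@timed
def waithere(seconds=0.1):
    time.sleep(seconds)

total = add2(4, 7)   # prints the time taken by add2
waithere()           # prints the time taken by waithere
```

The decorator leaves the function bodies untouched, so it can be added or removed without changing what the code computes.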

Exercise

Revisit some of the codes we have run previously. You can do this from a Jupyter Notebook by clicking File > Download as > Python (.py). You may need to comment out some sections, especially where figures are displayed, to get them to run. Run it using cProfile and look at the results. Can you identify where improvements could be made?

Loops and vectorising code with numpy and pandas

Your problem might be solved by using the fast way certain packages handle certain datatypes.

Generally speaking, pandas and numpy should be libraries you use frequently. They offer advantages in high performance computing including:

1. Efficient data structures that, under the hood, are implemented in fast C code rather than Python.

2. Promoting explicit use of datatype declarations, which makes memory management of data, and of functions working on this data, faster.

3. Elegant syntax promoting concise behaviour.

4. Data structures come with common built-in functions that are designed to be used in a vectorised way.

Let’s explore this last point on vectorisation with an example. Take this nested for loop example:

#import packages
import numpy as np
import pandas as pd
import time

#Create some fake data to work with
Samples = pd.DataFrame(np.random.randint(0,100,size=(1000, 4)), columns=list(['Alpha','Beta','Gamma','Delta']))
Wells = pd.DataFrame(np.random.randint(0,100,size=(50, 1)), columns=list(['Alpha']))

#This could perhaps be the id of a well and the list of samples found in the well.
#You want to match up the samples with some other list,
#perhaps the samples from some larger database like PetDB.

#Create an empty dataframe to fill with the resulting matches
totalSlow=pd.DataFrame(columns=Samples.columns)
totalFast=pd.DataFrame(columns=Samples.columns)

#Now compare the nested for-loop method:

tic=time.time()
for index,samp in Samples.iterrows():
    for index2,well in Wells.iterrows():
        if well['Alpha']==samp['Alpha']:
            #DataFrame.append was removed in pandas 2.0; pd.concat adds the row instead
            totalSlow=pd.concat([totalSlow,samp.to_frame().T],ignore_index=True)
totalSlow=totalSlow.drop_duplicates()
toc=time.time()
print("Nested-loop Runtime:",toc-tic, "seconds")

Nested-loop Runtime: 4.68686056137085 seconds

#Or the vectorised method:

tic=time.time()
totalFast=Samples[Samples['Alpha'].isin(Wells.Alpha.tolist())]
totalFast=totalFast.drop_duplicates()
toc=time.time()
print("Vectorized Runtime:",toc-tic, "seconds")

Vectorized Runtime: 0.00400090217590332 seconds

Which one is faster? Note the use of some really basic timing functions; these can help you understand the speed of your code.
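The same idea can be tried with plain numpy arrays. The sketch below is an illustration rather than part of the original exercise: it builds a membership mask once with a Python-level loop and once with a single call to np.isin, then checks that both give the same answer. The array names and sizes are assumptions chosen to resemble the 'Alpha' columns above.

```python
import time
import numpy as np

rng = np.random.default_rng(seed=0)           # seeded so the run is repeatable
samples = rng.integers(0, 100, size=100_000)  # stand-in for Samples['Alpha']
wells = rng.integers(0, 100, size=50)         # stand-in for Wells['Alpha']

# Loop version: test each sample against the well values one at a time.
tic = time.perf_counter()
well_values = wells.tolist()
mask_loop = np.array([value in well_values for value in samples.tolist()])
loop_seconds = time.perf_counter() - tic

# Vectorised version: a single call that runs in compiled code.
tic = time.perf_counter()
mask_fast = np.isin(samples, wells)
fast_seconds = time.perf_counter() - tic

print("Same answer:", np.array_equal(mask_loop, mask_fast))
print(f"Loop: {loop_seconds:.4f} s, vectorised: {fast_seconds:.4f} s")
```

Checking that the fast version reproduces the slow version's result is a useful habit whenever code is rewritten for speed.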

Python Multiprocessing

From within Python you may need a flexible way to manage computational resources. This is traditionally done with the multiprocessing library.

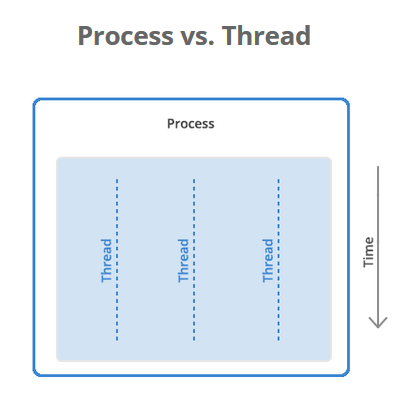

With multiprocessing, Python creates new processes. A process here can be thought of as almost a completely different program, though technically they are usually defined as a collection of resources where the resources include memory, file handles and things like that.

One way to think about it is that each process runs in its own Python interpreter, and multiprocessing farms out parts of your program to run on each process.

Simple multiprocessing example

Some basic concepts in the multiprocessing library are:

1. The Pool(processes) object creates a pool of processes. processes is the number of worker processes to use (i.e. Python interpreters). If processes is None then the number returned by os.cpu_count() is used.

2. The map(function, list) method of this object uses the pool to map a defined function to a list/iterator object.
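As a minimal sketch of these two concepts, assuming the file is saved and run as a script, the example below maps a toy function over a range of numbers. The function square and the choice of two processes are arbitrary and only for illustration.

```python
import multiprocessing

def square(x):
    """Work done by each worker. It is defined at module level so that
    worker processes can import it."""
    return x * x

if __name__ == "__main__":
    # The guard stops worker processes from re-running this block
    # when they import the script.
    with multiprocessing.Pool(processes=2) as pool:
        results = pool.map(square, range(10))
    print(results)
```

Pool.map returns its results in the same order as the inputs, even though the individual tasks may finish in a different order.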

Let us implement multiprocessing in its basic form. You can complete this exercise in a notebook or a script.

#Import the libraries we will need

import shapefile

import numpy as np

import matplotlib.pyplot as plt

import multiprocessing

import time

#Check how many cpus are availble on your computer

multiprocessing.cpu_count()

#Read in the shapefile that we will use

sf = shapefile.Reader("../data/platepolygons/topology_platepolygons_0.00Ma.shp")

recs = sf.records()

shapes = sf.shapes()

fields = sf.fields

Nshp = len(shapes)

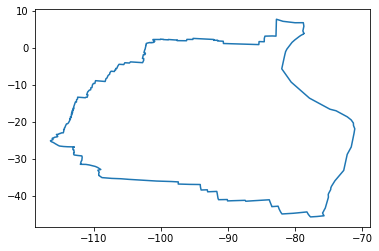

polygons=np.arange(Nshp)#Get some details about the shapefile. Plot one of the polygons.

#Let us find the areas of each of the polygons in the shapefile.

print(recs[10])

polygonShape=shapes[10].points

poly=np.array(polygonShape)

plt.plot(poly[:,0],poly[:,1])Record #10: [0, 0.0, 'Global_EarthByte_230-0Ma_GK07_AREPS_PlateBoundaries.gpml', 'Global_EarthByte_230-0Ma_GK07_AREPS.rot', 911, '', 'gpml:TopologicalClosedPlateBoundary', 0.9, 0.0, 'Nazca Plate', '', 'GPlates-ef2e06a7-4086-4062-9df6-1fa3133f50b8', 0, '', 0, 0, 0.0]

[<matplotlib.lines.Line2D at 0x2b29bc7ed08>]


#Implement a function to calculate the area of a polygon.
# Area of Polygon using Shoelace formula
# http://en.wikipedia.org/wiki/Shoelace_formula
def PolygonArea(nshp):
    start_time = time.time()
    polygonShape=shapes[nshp].points
    corners=np.array(polygonShape)
    n = len(corners) # number of corners
    #Area calculation using Shoelace formula
    area = 0.0
    for i in range(n):
        j = (i + 1) % n
        area += corners[i][0] * corners[j][1]
        area -= corners[j][0] * corners[i][1]
    area = abs(area) / 2.0
    time.sleep(0.2)
    endtime=time.time() - start_time
    print("Process {} Finished in {:0.4f}s.".format(nshp,endtime))
    return(area)

#Run the function for each polygon/plate in the shapefile:
start_time = time.time()
Areas1=[]
for i in polygons:
    Areas1.append(PolygonArea(i))
print("Final Runtime", time.time() - start_time)

Process 0 Finished in 0.2019s.

Process 1 Finished in 0.2133s.

Process 2 Finished in 0.2097s.

Process 3 Finished in 0.2137s.

Process 4 Finished in 0.2162s.

Process 5 Finished in 0.2106s.

Process 6 Finished in 0.2134s.

Process 7 Finished in 0.2145s.

Process 8 Finished in 0.2117s.

Process 9 Finished in 0.2123s.

Process 10 Finished in 0.2124s.

Process 11 Finished in 0.2139s.

Process 12 Finished in 0.2111s.

Process 13 Finished in 0.2107s.

Process 14 Finished in 0.2138s.

Process 15 Finished in 0.2114s.

Process 16 Finished in 0.2107s.

Process 17 Finished in 0.2134s.

Process 18 Finished in 0.2119s.

Process 19 Finished in 0.2114s.

Process 20 Finished in 0.2129s.

Process 21 Finished in 0.2143s.

Process 22 Finished in 0.2148s.

Process 23 Finished in 0.2125s.

Process 24 Finished in 0.2122s.

Process 25 Finished in 0.2105s.

Process 26 Finished in 0.2138s.

Process 27 Finished in 0.2164s.

Process 28 Finished in 0.2122s.

Process 29 Finished in 0.2112s.

Process 30 Finished in 0.2109s.

Process 31 Finished in 0.2132s.

Process 32 Finished in 0.2136s.

Process 33 Finished in 0.2133s.

Process 34 Finished in 0.2112s.

Process 35 Finished in 0.2135s.

Process 36 Finished in 0.2132s.

Process 37 Finished in 0.2141s.

Process 38 Finished in 0.2104s.

Process 39 Finished in 0.2117s.

Process 40 Finished in 0.2124s.

Process 41 Finished in 0.2125s.

Process 42 Finished in 0.2128s.

Process 43 Finished in 0.2100s.

Process 44 Finished in 0.2148s.

Process 45 Finished in 0.2138s.

Final Runtime 9.796719074249268

Now we will have to run the multiprocessing version outside of our Jupyter environment. Put the following into a script or download the full version here.

#Put this snippet in a code block outside of Jupyter, but this time, use the multiprocessing capabilities
#Because of how multiprocessing works, it does not always behave nicely in Jupyter
def make_global(shapes):
    global gshapes
    gshapes = shapes

start_time = time.time()
with multiprocessing.Pool(initializer=make_global, initargs=(shapes,)) as pool:
    Areas2 = pool.map(PolygonArea,polygons)
print("Final Runtime", time.time() - start_time)

Process 0 Finished in 0.2148s.

Process 1 Finished in 0.2199s.

Process 30 Finished in 0.2136s.

Process 31 Finished in 0.2135s.

Process 4 Finished in 0.2138s.

Process 5 Finished in 0.2047s.

Process 24 Finished in 0.2137s.

Process 25 Finished in 0.2138s.

Process 34 Finished in 0.2116s.

Process 35 Finished in 0.2124s.

Process 6 Finished in 0.2138s.

Process 7 Finished in 0.2047s.

Process 16 Finished in 0.2137s.

Process 17 Finished in 0.2138s.

Process 38 Finished in 0.2106s.

Process 39 Finished in 0.2124s.

Process 8 Finished in 0.2138s.

Process 9 Finished in 0.2047s.

Process 26 Finished in 0.2137s.

Process 27 Finished in 0.2138s.

Process 42 Finished in 0.2106s.

Process 43 Finished in 0.2124s.

Process 12 Finished in 0.2138s.

Process 13 Finished in 0.2047s.

Process 20 Finished in 0.2137s.

Process 21 Finished in 0.2138s.

Process 32 Finished in 0.2116s.

Process 33 Finished in 0.2124s.

Process 14 Finished in 0.2138s.

Process 15 Finished in 0.2037s.

Process 22 Finished in 0.2137s.

Process 23 Finished in 0.2138s.

Process 40 Finished in 0.2106s.

Process 41 Finished in 0.2124s.

Process 2 Finished in 0.2138s.

Process 3 Finished in 0.2047s.

Process 28 Finished in 0.2137s.

Process 29 Finished in 0.2138s.

Process 44 Finished in 0.2096s.

Process 45 Finished in 0.2124s.

Process 10 Finished in 0.2138s.

Process 11 Finished in 0.2047s.

Process 18 Finished in 0.2137s.

Process 19 Finished in 0.2138s.

Process 36 Finished in 0.2116s.

Process 37 Finished in 0.2124s.

Final Runtime 5.4170615673065186

Is there any speed up? Why are the processes not in order? Is there any overhead?

Challenge

- The pyshp/shapefile library contains the function signed_area, which can calculate the area of a polygon. Replace the calculation of area using the shoelace formula in the PolygonArea function with the signed_area method:

area=shapefile.signed_area(corners)

- How does this change the timings of your speed tests? Hint: it might not be by much.

Solution

def PolygonArea(nshp):
    start_time = time.time()
    #Get the corner points of this polygon, as in the original function
    polygonShape=shapes[nshp].points
    corners=np.array(polygonShape)
    area=shapefile.signed_area(corners)
    endtime=time.time() - start_time
    print("Process {} Finished in {:0.4f}s. \n".format(nshp,endtime))
    return(area)

#Test the serial version
start_time = time.time()
Areas1=[]
for i in polygons:
    Areas1.append(PolygonArea(i))
print("Final Runtime", time.time() - start_time)

#Test the multiprocessing version
start_time = time.time()
with multiprocessing.Pool(initializer=make_global, initargs=(shapes,)) as pool:
    Areas2 = pool.map(PolygonArea,polygons)
print("Final Runtime", time.time() - start_time)
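Another option, in the spirit of the vectorising section, is to write the shoelace formula with whole-array numpy operations instead of a Python loop. The sketch below is an illustration only and is not how pyshp implements signed_area; the function names and the test polygons are assumptions made for the example.

```python
import numpy as np

def polygon_area_loop(corners):
    """Shoelace formula with an explicit Python loop (as in PolygonArea)."""
    n = len(corners)
    area = 0.0
    for i in range(n):
        j = (i + 1) % n
        area += corners[i][0] * corners[j][1]
        area -= corners[j][0] * corners[i][1]
    return abs(area) / 2.0

def polygon_area_vectorised(corners):
    """Shoelace formula using whole-array operations instead of a loop."""
    corners = np.asarray(corners, dtype=float)
    x, y = corners[:, 0], corners[:, 1]
    # np.roll(..., -1) lines each corner up with the next one, wrapping around.
    return abs(np.dot(x, np.roll(y, -1)) - np.dot(np.roll(x, -1), y)) / 2.0

unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]
triangle = [(0, 0), (4, 0), (0, 3)]
print(polygon_area_loop(unit_square), polygon_area_vectorised(unit_square))
print(polygon_area_loop(triangle), polygon_area_vectorised(triangle))
```

Both functions return the unsigned area, matching the abs() call in the original PolygonArea.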

There is generally a sweet spot in how many processes you create to optimise the run time. A large number of Python processes is generally not advisable, as it involves a large fixed cost in setting up many Python interpreters and their supporting infrastructure. Play around with different numbers of processes in the Pool(processes) statement to see how the runtime varies. Also, well-developed libraries often have nicely optimised algorithms, so you may not have to reinvent the wheel (Haha at this Python pun).
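One way to explore that sweet spot is to time the same workload with several pool sizes and compare each result against a best-case estimate. The sketch below assumes it is run as a script. slow_task is a hypothetical stand-in for PolygonArea, and the task count and sleep time are deliberately smaller than in the exercise so that the experiment finishes quickly. For reference, the 46 polygons above at 0.2 s each give a best-case serial time of 9.2 s, against the 9.8 s that was measured.

```python
import math
import multiprocessing
import time

def ideal_runtime(n_tasks, task_seconds, processes):
    """Best-case wall time if tasks are spread evenly over the processes
    and all start-up and communication costs are ignored."""
    return math.ceil(n_tasks / processes) * task_seconds

def slow_task(i):
    """Hypothetical stand-in for PolygonArea: sleep briefly, return the input."""
    time.sleep(0.05)
    return i

if __name__ == "__main__":
    n_tasks = 16
    for processes in (1, 2, 4, 8):
        tic = time.perf_counter()
        with multiprocessing.Pool(processes=processes) as pool:
            pool.map(slow_task, range(n_tasks))
        measured = time.perf_counter() - tic
        print(f"{processes} processes: measured {measured:.2f} s, "
              f"ideal {ideal_runtime(n_tasks, 0.05, processes):.2f} s")
```

The gap between the measured and ideal times is the overhead of creating the processes and passing data to them, which is why adding more processes eventually stops helping.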

Useful links

https://realpython.com/python-concurrency/

https://docs.python.org/3/library/multiprocessing.html

https://www.backblaze.com/blog/whats-the-diff-programs-processes-and-threads/

MPI: Message Passing Interface

MPI is a standardized and portable message-passing system designed to function on a wide variety of parallel computers. The standard defines the syntax and semantics of a core of library routines useful to a wide range of users writing portable message-passing programs in C, C++, and Fortran. There are several well-tested and efficient implementations of MPI, many of which are open-source or in the public domain.

MPI for Python, found in mpi4py, provides bindings of the MPI standard for the Python programming language, allowing any Python program to exploit multiple processors. This simple code demonstrates the collection of resources and how code is run on different processes:

#!pip install mpi4py
#Run with:
#mpiexec -np 4 python mpi.py
from mpi4py import MPI
comm = MPI.COMM_WORLD
size = comm.Get_size()
rank = comm.Get_rank()
print("I am rank %d in group of %d processes." % (rank, size))

I am rank 0 in group of 1 processes.

#Want more help on the MPI.COMM_WORLD class object?
#See the methods, functions, and variables associated with it.
help(comm)

Help on Intracomm object:

class Intracomm(Comm)

| Intracommunicator

|

| Method resolution order:

| Intracomm

| Comm

| builtins.object

|

| Methods defined here:

|

| Accept(...)

| Intracomm.Accept(self, port_name, Info info=INFO_NULL, int root=0)

|

| Accept a request to form a new intercommunicator

|

| Cart_map(...)

| Intracomm.Cart_map(self, dims, periods=None)

|

| Return an optimal placement for the

| calling process on the physical machine

|

| Connect(...)

| Intracomm.Connect(self, port_name, Info info=INFO_NULL, int root=0)

|

| Make a request to form a new intercommunicator

|

| Create_cart(...)

| Intracomm.Create_cart(self, dims, periods=None, bool reorder=False)

|

| Create cartesian communicator

|

| Create_dist_graph(...)

| Intracomm.Create_dist_graph(self, sources, degrees, destinations, weights=None, Info info=INFO_NULL, bool reorder=False)

|

| Create distributed graph communicator

|

| Create_dist_graph_adjacent(...)

| Intracomm.Create_dist_graph_adjacent(self, sources, destinations, sourceweights=None, destweights=None, Info info=INFO_NULL, bool reorder=False)

|

| Create distributed graph communicator

|

| Create_graph(...)

| Intracomm.Create_graph(self, index, edges, bool reorder=False)

|

| Create graph communicator

|

| Create_intercomm(...)

| Intracomm.Create_intercomm(self, int local_leader, Intracomm peer_comm, int remote_leader, int tag=0)

|

| Create intercommunicator

|

| Exscan(...)

| Intracomm.Exscan(self, sendbuf, recvbuf, Op op=SUM)

|

| Exclusive Scan

|

| Graph_map(...)

| Intracomm.Graph_map(self, index, edges)

|

| Return an optimal placement for the

| calling process on the physical machine

|

| Iexscan(...)

| Intracomm.Iexscan(self, sendbuf, recvbuf, Op op=SUM)

|

| Inclusive Scan

|

| Iscan(...)

| Intracomm.Iscan(self, sendbuf, recvbuf, Op op=SUM)

|

| Inclusive Scan

|

| Scan(...)

| Intracomm.Scan(self, sendbuf, recvbuf, Op op=SUM)

|

| Inclusive Scan

|

| Spawn(...)

| Intracomm.Spawn(self, command, args=None, int maxprocs=1, Info info=INFO_NULL, int root=0, errcodes=None)

|

| Spawn instances of a single MPI application

|

| Spawn_multiple(...)

| Intracomm.Spawn_multiple(self, command, args=None, maxprocs=None, info=INFO_NULL, int root=0, errcodes=None)

|

| Spawn instances of multiple MPI applications

|

| exscan(...)

| Intracomm.exscan(self, sendobj, op=SUM)

| Exclusive Scan

|

| scan(...)

| Intracomm.scan(self, sendobj, op=SUM)

| Inclusive Scan

|

| ----------------------------------------------------------------------

| Static methods defined here:

|

| __new__(*args, **kwargs) from builtins.type

| Create and return a new object. See help(type) for accurate signature.

|

| ----------------------------------------------------------------------

| Methods inherited from Comm:

|

| Abort(...)

| Comm.Abort(self, int errorcode=0)

|

| Terminate MPI execution environment

|

| .. warning:: This is a direct call, use it with care!!!.

|

| Allgather(...)

| Comm.Allgather(self, sendbuf, recvbuf)

|

| Gather to All, gather data from all processes and

| distribute it to all other processes in a group

|

| Allgatherv(...)

| Comm.Allgatherv(self, sendbuf, recvbuf)

|

| Gather to All Vector, gather data from all processes and

| distribute it to all other processes in a group providing

| different amount of data and displacements

|

| Allreduce(...)

| Comm.Allreduce(self, sendbuf, recvbuf, Op op=SUM)

|

| All Reduce

|

| Alltoall(...)

| Comm.Alltoall(self, sendbuf, recvbuf)

|

| All to All Scatter/Gather, send data from all to all

| processes in a group

|

| Alltoallv(...)

| Comm.Alltoallv(self, sendbuf, recvbuf)

|

| All to All Scatter/Gather Vector, send data from all to all

| processes in a group providing different amount of data and

| displacements

|

| Alltoallw(...)

| Comm.Alltoallw(self, sendbuf, recvbuf)

|

| Generalized All-to-All communication allowing different

| counts, displacements and datatypes for each partner

|

| Barrier(...)

| Comm.Barrier(self)

|

| Barrier synchronization

|

| Bcast(...)

| Comm.Bcast(self, buf, int root=0)

|

| Broadcast a message from one process

| to all other processes in a group

|

| Bsend(...)

| Comm.Bsend(self, buf, int dest, int tag=0)

|

| Blocking send in buffered mode

|

| Bsend_init(...)

| Comm.Bsend_init(self, buf, int dest, int tag=0)

|

| Persistent request for a send in buffered mode

|

| Call_errhandler(...)

| Comm.Call_errhandler(self, int errorcode)

|

| Call the error handler installed on a communicator

|

| Clone(...)

| Comm.Clone(self)

|

| Clone an existing communicator

|

| Create(...)

| Comm.Create(self, Group group)

|

| Create communicator from group

|

| Create_group(...)

| Comm.Create_group(self, Group group, int tag=0)

|

| Create communicator from group

|

| Delete_attr(...)

| Comm.Delete_attr(self, int keyval)

|

| Delete attribute value associated with a key

|

| Disconnect(...)

| Comm.Disconnect(self)

|

| Disconnect from a communicator

|

| Dup(...)

| Comm.Dup(self, Info info=None)

|

| Duplicate an existing communicator

|

| Dup_with_info(...)

| Comm.Dup_with_info(self, Info info)

|

| Duplicate an existing communicator

|

| Free(...)

| Comm.Free(self)

|

| Free a communicator

|

| Gather(...)

| Comm.Gather(self, sendbuf, recvbuf, int root=0)

|

| Gather together values from a group of processes

|

| Gatherv(...)

| Comm.Gatherv(self, sendbuf, recvbuf, int root=0)

|

| Gather Vector, gather data to one process from all other

| processes in a group providing different amount of data and

| displacements at the receiving sides

|

| Get_attr(...)

| Comm.Get_attr(self, int keyval)

|

| Retrieve attribute value by key

|

| Get_errhandler(...)

| Comm.Get_errhandler(self)

|

| Get the error handler for a communicator

|

| Get_group(...)

| Comm.Get_group(self)

|

| Access the group associated with a communicator

|

| Get_info(...)

| Comm.Get_info(self)

|

| Return the hints for a communicator

| that are currently in use

|

| Get_name(...)

| Comm.Get_name(self)

|

| Get the print name for this communicator

|

| Get_rank(...)

| Comm.Get_rank(self)

|

| Return the rank of this process in a communicator

|

| Get_size(...)

| Comm.Get_size(self)

|

| Return the number of processes in a communicator

|

| Get_topology(...)

| Comm.Get_topology(self)

|

| Determine the type of topology (if any)

| associated with a communicator

|

| Iallgather(...)

| Comm.Iallgather(self, sendbuf, recvbuf)

|

| Nonblocking Gather to All

|

| Iallgatherv(...)

| Comm.Iallgatherv(self, sendbuf, recvbuf)

|

| Nonblocking Gather to All Vector

|

| Iallreduce(...)

| Comm.Iallreduce(self, sendbuf, recvbuf, Op op=SUM)

|

| Nonblocking All Reduce

|

| Ialltoall(...)

| Comm.Ialltoall(self, sendbuf, recvbuf)

|

| Nonblocking All to All Scatter/Gather

|

| Ialltoallv(...)

| Comm.Ialltoallv(self, sendbuf, recvbuf)

|

| Nonblocking All to All Scatter/Gather Vector

|

| Ialltoallw(...)

| Comm.Ialltoallw(self, sendbuf, recvbuf)

|

| Nonblocking Generalized All-to-All

|

| Ibarrier(...)

| Comm.Ibarrier(self)

|

| Nonblocking Barrier

|

| Ibcast(...)

| Comm.Ibcast(self, buf, int root=0)

|

| Nonblocking Broadcast

|

| Ibsend(...)

| Comm.Ibsend(self, buf, int dest, int tag=0)

|

| Nonblocking send in buffered mode

|

| Idup(...)

| Comm.Idup(self)

|

| Nonblocking duplicate an existing communicator

|

| Igather(...)

| Comm.Igather(self, sendbuf, recvbuf, int root=0)

|

| Nonblocking Gather

|

| Igatherv(...)

| Comm.Igatherv(self, sendbuf, recvbuf, int root=0)

|

| Nonblocking Gather Vector

|

| Improbe(...)

| Comm.Improbe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

|

| Nonblocking test for a matched message

|

| Iprobe(...)

| Comm.Iprobe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

|

| Nonblocking test for a message

|

| Irecv(...)

| Comm.Irecv(self, buf, int source=ANY_SOURCE, int tag=ANY_TAG)

|

| Nonblocking receive

|

| Ireduce(...)

| Comm.Ireduce(self, sendbuf, recvbuf, Op op=SUM, int root=0)

|

| Nonblocking Reduce

|

| Ireduce_scatter(...)

| Comm.Ireduce_scatter(self, sendbuf, recvbuf, recvcounts=None, Op op=SUM)

|

| Nonblocking Reduce-Scatter (vector version)

|

| Ireduce_scatter_block(...)

| Comm.Ireduce_scatter_block(self, sendbuf, recvbuf, Op op=SUM)

|

| Nonblocking Reduce-Scatter Block (regular, non-vector version)

|

| Irsend(...)

| Comm.Irsend(self, buf, int dest, int tag=0)

|

| Nonblocking send in ready mode

|

| Is_inter(...)

| Comm.Is_inter(self)

|

| Test to see if a comm is an intercommunicator

|

| Is_intra(...)

| Comm.Is_intra(self)

|

| Test to see if a comm is an intracommunicator

|

| Iscatter(...)

| Comm.Iscatter(self, sendbuf, recvbuf, int root=0)

|

| Nonblocking Scatter

|

| Iscatterv(...)

| Comm.Iscatterv(self, sendbuf, recvbuf, int root=0)

|

| Nonblocking Scatter Vector

|

| Isend(...)

| Comm.Isend(self, buf, int dest, int tag=0)

|

| Nonblocking send

|

| Issend(...)

| Comm.Issend(self, buf, int dest, int tag=0)

|

| Nonblocking send in synchronous mode

|

| Mprobe(...)

| Comm.Mprobe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

|

| Blocking test for a matched message

|

| Probe(...)

| Comm.Probe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

|

| Blocking test for a message

|

| .. note:: This function blocks until the message arrives.

|

| Recv(...)

| Comm.Recv(self, buf, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

|

| Blocking receive

|

| .. note:: This function blocks until the message is received

|

| Recv_init(...)

| Comm.Recv_init(self, buf, int source=ANY_SOURCE, int tag=ANY_TAG)

|

| Create a persistent request for a receive

|

| Reduce(...)

| Comm.Reduce(self, sendbuf, recvbuf, Op op=SUM, int root=0)

|

| Reduce

|

| Reduce_scatter(...)

| Comm.Reduce_scatter(self, sendbuf, recvbuf, recvcounts=None, Op op=SUM)

|

| Reduce-Scatter (vector version)

|

| Reduce_scatter_block(...)

| Comm.Reduce_scatter_block(self, sendbuf, recvbuf, Op op=SUM)

|

| Reduce-Scatter Block (regular, non-vector version)

|

| Rsend(...)

| Comm.Rsend(self, buf, int dest, int tag=0)

|

| Blocking send in ready mode

|

| Rsend_init(...)

| Comm.Rsend_init(self, buf, int dest, int tag=0)

|

| Persistent request for a send in ready mode

|

| Scatter(...)

| Comm.Scatter(self, sendbuf, recvbuf, int root=0)

|

| Scatter data from one process

| to all other processes in a group

|

| Scatterv(...)

| Comm.Scatterv(self, sendbuf, recvbuf, int root=0)

|

| Scatter Vector, scatter data from one process to all other

| processes in a group providing different amount of data and

| displacements at the sending side

|

| Send(...)

| Comm.Send(self, buf, int dest, int tag=0)

|

| Blocking send

|

| .. note:: This function may block until the message is

| received. Whether or not `Send` blocks depends on

| several factors and is implementation dependent

|

| Send_init(...)

| Comm.Send_init(self, buf, int dest, int tag=0)

|

| Create a persistent request for a standard send

|

| Sendrecv(...)

| Comm.Sendrecv(self, sendbuf, int dest, int sendtag=0, recvbuf=None, int source=ANY_SOURCE, int recvtag=ANY_TAG, Status status=None)

|

| Send and receive a message

|

| .. note:: This function is guaranteed not to deadlock in

| situations where pairs of blocking sends and receives may

| deadlock.

|

| .. caution:: A common mistake when using this function is to

| mismatch the tags with the source and destination ranks,

| which can result in deadlock.

|

| Sendrecv_replace(...)

| Comm.Sendrecv_replace(self, buf, int dest, int sendtag=0, int source=ANY_SOURCE, int recvtag=ANY_TAG, Status status=None)

|

| Send and receive a message

|

| .. note:: This function is guaranteed not to deadlock in

| situations where pairs of blocking sends and receives may

| deadlock.

|

| .. caution:: A common mistake when using this function is to

| mismatch the tags with the source and destination ranks,

| which can result in deadlock.

|

| Set_attr(...)

| Comm.Set_attr(self, int keyval, attrval)

|

| Store attribute value associated with a key

|

| Set_errhandler(...)

| Comm.Set_errhandler(self, Errhandler errhandler)

|

| Set the error handler for a communicator

|

| Set_info(...)

| Comm.Set_info(self, Info info)

|

| Set new values for the hints

| associated with a communicator

|

| Set_name(...)

| Comm.Set_name(self, name)

|

| Set the print name for this communicator

|

| Split(...)

| Comm.Split(self, int color=0, int key=0)

|

| Split communicator by color and key

|

| Split_type(...)

| Comm.Split_type(self, int split_type, int key=0, Info info=INFO_NULL)

|

| Split communicator by color and key

|

| Ssend(...)

| Comm.Ssend(self, buf, int dest, int tag=0)

|

| Blocking send in synchronous mode

|

| Ssend_init(...)

| Comm.Ssend_init(self, buf, int dest, int tag=0)

|

| Persistent request for a send in synchronous mode

|

| __bool__(self, /)

| self != 0

|

| __eq__(self, value, /)

| Return self==value.

|

| __ge__(self, value, /)

| Return self>=value.

|

| __gt__(self, value, /)

| Return self>value.

|

| __le__(self, value, /)

| Return self<=value.

|

| __lt__(self, value, /)

| Return self<value.

|

| __ne__(self, value, /)

| Return self!=value.

|

| allgather(...)

| Comm.allgather(self, sendobj)

| Gather to All

|

| allreduce(...)

| Comm.allreduce(self, sendobj, op=SUM)

| Reduce to All

|

| alltoall(...)

| Comm.alltoall(self, sendobj)

| All to All Scatter/Gather

|

| barrier(...)

| Comm.barrier(self)

| Barrier

|

| bcast(...)

| Comm.bcast(self, obj, int root=0)

| Broadcast

|

| bsend(...)

| Comm.bsend(self, obj, int dest, int tag=0)

| Send in buffered mode

|

| gather(...)

| Comm.gather(self, sendobj, int root=0)

| Gather

|

| ibsend(...)

| Comm.ibsend(self, obj, int dest, int tag=0)

| Nonblocking send in buffered mode

|

| improbe(...)

| Comm.improbe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

| Nonblocking test for a matched message

|

| iprobe(...)

| Comm.iprobe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

| Nonblocking test for a message

|

| irecv(...)

| Comm.irecv(self, buf=None, int source=ANY_SOURCE, int tag=ANY_TAG)

| Nonblocking receive

|

| isend(...)

| Comm.isend(self, obj, int dest, int tag=0)

| Nonblocking send

|

| issend(...)

| Comm.issend(self, obj, int dest, int tag=0)

| Nonblocking send in synchronous mode

|

| mprobe(...)

| Comm.mprobe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

| Blocking test for a matched message

|

| probe(...)

| Comm.probe(self, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

| Blocking test for a message

|

| py2f(...)

| Comm.py2f(self)

|

| recv(...)

| Comm.recv(self, buf=None, int source=ANY_SOURCE, int tag=ANY_TAG, Status status=None)

| Receive

|

| reduce(...)

| Comm.reduce(self, sendobj, op=SUM, int root=0)

| Reduce

|

| scatter(...)

| Comm.scatter(self, sendobj, int root=0)

| Scatter

|

| send(...)

| Comm.send(self, obj, int dest, int tag=0)

| Send

|

| sendrecv(...)

| Comm.sendrecv(self, sendobj, int dest, int sendtag=0, recvbuf=None, int source=ANY_SOURCE, int recvtag=ANY_TAG, Status status=None)

| Send and Receive

|

| ssend(...)

| Comm.ssend(self, obj, int dest, int tag=0)

| Send in synchronous mode

|

| ----------------------------------------------------------------------

| Class methods inherited from Comm:

|

| Compare(...) from builtins.type

| Comm.Compare(type cls, Comm comm1, Comm comm2)

|

| Compare two communicators

|

| Create_keyval(...) from builtins.type

| Comm.Create_keyval(type cls, copy_fn=None, delete_fn=None, nopython=False)

|

| Create a new attribute key for communicators

|

| Free_keyval(...) from builtins.type

| Comm.Free_keyval(type cls, int keyval)

|

| Free and attribute key for communicators

|

| Get_parent(...) from builtins.type

| Comm.Get_parent(type cls)

|

| Return the parent intercommunicator for this process

|

| Join(...) from builtins.type

| Comm.Join(type cls, int fd)

|

| Create a intercommunicator by joining

| two processes connected by a socket

|

| f2py(...) from builtins.type

| Comm.f2py(type cls, arg)

|

| ----------------------------------------------------------------------

| Data descriptors inherited from Comm:

|

| group

| communicator group

|

| info

| communicator info

|

| is_inter

| is intercommunicator

|

| is_intra

| is intracommunicator

|

| is_topo

| is a topology communicator

|

| name

| communicator name

|

| rank

| rank of this process in communicator

|

| size

| number of processes in communicator

|

| topology

| communicator topology type

|

| ----------------------------------------------------------------------

| Data and other attributes inherited from Comm:

|

| __hash__ = None

Key points

- “Understand there are different ways to accelerate”

- “The best method depends on your algorithms, code and data”

- “Load the multiprocessing library to execute a function in a parallel manner”