Building Machine Learning Datasets From Scratch

All machine learning problems begin with a dataset, and before we can perform any kind of inference on that dataset we must create/wrangle/build it. This is often the most time-consuming and hard part of a successful machine learning workflow. There is no set procedure here, as all data is different, although there are a few simple methods we can take to make a useful dataset.

We will be using data from a submitted Manuscript (Butterworth and Barnett-Moore 2020) which was a finalist in the Unearthed, ExploreSA: Gawler Challenge. You can visit the original repo here.

Goal: Create a table of data containing “targets” and “predictor variables”

The targets in an ML context can be a simple binary 1 or 0, or could be some category (classification), or the value of a particular parameter (regression problems). It is the “feature” of a dataset that we want to learn something about!

The “predictor/feature variables” are the qualities/parameters that may have some causal relationship with the the target.

Step 1 - What is our target variable?

Are we classifying something?

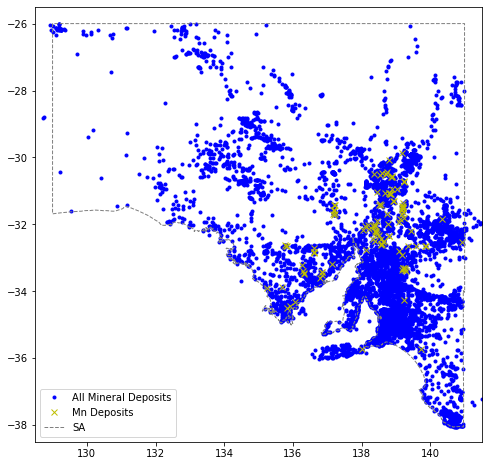

Deposit locations - mine and mineral occurances

The most important dataset for this workflow is the currently known locations of mineral occurences. Using the data we already know about these known-deposits we will build a model to predict where future occurences will be.

# For working with shapefiles (packaged is called pyshp)

import shapefile

# For working with dataframes

import pandas as pd# Set the filename

mineshape="data/MinesMinerals/mines_and_mineral_occurrences_all.shp"

# Set shapefile attributes and assign

sf = shapefile.Reader(mineshape)

fields = [x[0] for x in sf.fields][1:]

records = sf.records()

shps = [s.points for s in sf.shapes()]

# Write into a dataframe for easy use

df = pd.DataFrame(columns=fields, data=records)View the metadata of the South Australian all mines and mineral deposits to get a better understanding for what features we could use as a target.

#See what the dataframe looks like

print(df.columns)

#For clean printing to html drop columns that contains annoying / and \ chars.

#And set max columns

pd.options.display.max_columns = 8

df.drop(columns=['REFERENCE','O_MAP_SYMB'])Index(['MINDEP_NO', 'DEP_NAME', 'REFERENCE', 'COMM_CODE', 'COMMODS',

'COMMOD_MAJ', 'COMM_SPECS', 'GCHEM_ASSC', 'DISC_YEAR', 'CLASS_CODE',

'OPER_TYPE', 'MAP_SYMB', 'STATUS_VAL', 'SIZE_VAL', 'GEOL_PROV',

'DB_RES_RVE', 'DB_PROD', 'DB_DOC_IMG', 'DB_EXV_IMG', 'DB_DEP_IMG',

'DB_DEP_FLE', 'COX_CLASS', 'REG_O_CTRL', 'LOC_O_CTRL', 'LOC_O_COM',

'O_LITH_CDE', 'O_LITH01', 'O_STRAT_NM', 'H_LITH_CDE', 'H_LITH02',

'H_STRAT_NM', 'H_MAP_SYMB', 'EASTING', 'NORTHING', 'ZONE', 'LONGITUDE',

'LATITUDE', 'SVY_METHOD', 'HORZ_ACC', 'SRCE_MAP', 'SRCE_CNTRE',

'COMMENTS', 'O_MAP_SYMB'],

dtype='object')| MINDEP_NO | DEP_NAME | COMM_CODE | COMMODS | … | HORZ_ACC | SRCE_MAP | SRCE_CNTRE | COMMENTS | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 5219 | MOUNT DAVIES NO.2A | Ni | Nickel | … | 2000.0 | 500k meis | ||

| 1 | 52 | ONE STONE | Ni | Nickel | … | 500.0 | 71-385 | ||

| 2 | 8314 | HINCKLEY RANGE | Fe | Iron | … | 500.0 | |||

| 3 | 69 | KALKA | V, ILM | Vanadium, Ilmenite | … | 100.0 | 1 MILE | mgt polygon on digital map | |

| 4 | 65 | ECHIDNA | Ni | Nickel | … | 20.0 | 50K GEOL | DH ECHIDNA PROSPECT | |

| … | … | … | … | … | … | … | … | … | … |

| 8672 | 6937 | YARINGA | QTZE | Quartzite | … | 200.0 | 50k moc | fenced yard | |

| 8673 | 4729 | WELCHS | SCHT | Schist | … | 20.0 | 50k topo | ||

| 8674 | 4718 | ARCADIAN | CLAY | Clay | … | 5.0 | Plan 1951-0327 | Pit | |

| 8675 | 1436 | MCDONALD | Au | Gold | … | 200.0 | 50k moc | qz float | |

| 8676 | 8934 | FAIRFIELD FARM | SAND | Sand | … | 20.0 | pit |

8677 rows × 41 columns

#We are building a model to target South Australia, so load in a map of it.

gawlshape="data/SA/SA_STATE_POLYGON_shp"

shapeRead = shapefile.Reader(gawlshape)

shapes = shapeRead.shapes()

#Save the boundary xy pairs in arrays we will use throughout the workflow

xval = [x[0] for x in shapes[1].points]

yval = [x[1] for x in shapes[1].points]# Subset the data, for a single Mineral target

commname='Mn'

#Pull out all the occurences of the commodity and go from there

comm=df[df['COMM_CODE'].str.contains(commname)]

comm=comm.reset_index(drop=True)

print("Shape of "+ commname, comm.shape)

# Can make further subsets of the data here if needed

#commsig=comm[comm.SIZE_VAL!="Low Significance"]

#comm=comm[comm.SIZE_VAL!="Low Significance"]

#comm=comm[comm.COX_CLASS == "Olympic Dam Cu-U-Au"]

#comm=comm[(comm.lon<max(xval)) & (comm.lon>min(xval)) & (comm.lat>min(yval)) & (comm.lat<max(yval))]

Shape of Mn (115, 43)# For plotting

import matplotlib.pyplot as pltfig = plt.figure(figsize=(8,8))

ax = plt.axes()

ax.plot(df.LONGITUDE,df.LATITUDE,'b.',label="All Mineral Deposits")

ax.plot(comm.LONGITUDE,comm.LATITUDE,'yx',label=commname+" Deposits")

ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

#ax.plot(comm.LONGITUDE, comm.LATITUDE, marker='o', linestyle='',markersize=5, color='y',label=commname+" Deposits")

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

plt.legend(loc=3)

plt.show()

png

Step 2 - Wrangle the geophysical and geological datasets (variable features)

Each geophysical dataset could offer instight into various commodities. Here we load in the pre-processed datasets and prepare them for further manipulations, data-mining, and machine learning. All of the full datasets are availble from https://map.sarig.sa.gov.au/. For this exercise we have simplified the datasets (reduced complexity and resolution). Grab full datasets from https://github.com/natbutter/gawler-exploration/tree/master/ML-DATA

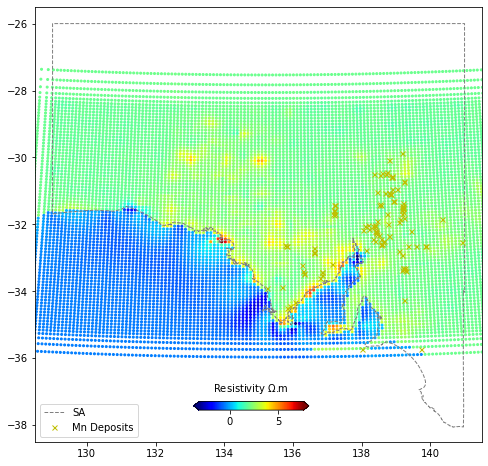

Resistivity xyz data

#Read in the data

data_res=pd.read_csv("data/AusLAMP_MT_Gawler_25.xyzr",

sep=',',header=0,names=['lat','lon','depth','resistivity'])

data_res| lat | lon | depth | resistivity | |

|---|---|---|---|---|

| 0 | -27.363931 | 128.680796 | -25.0 | 2.0007 |

| 1 | -27.659362 | 128.662322 | -25.0 | 1.9979 |

| 2 | -27.886602 | 128.647965 | -25.0 | 1.9948 |

| 3 | -28.061394 | 128.636833 | -25.0 | 1.9918 |

| 4 | -28.195844 | 128.628217 | -25.0 | 1.9885 |

| … | … | … | … | … |

| 11003 | -35.127716 | 142.399588 | -25.0 | 2.0079 |

| 11004 | -35.230939 | 142.408396 | -25.0 | 2.0084 |

| 11005 | -35.365124 | 142.419903 | -25.0 | 2.0085 |

| 11006 | -35.539556 | 142.434958 | -25.0 | 2.0076 |

| 11007 | -35.766303 | 142.454694 | -25.0 | 2.0049 |

11008 rows × 4 columns

This data is Lat-Lon spatial location and the value of the feature at that location.

fig = plt.figure(figsize=(8,8))

ax = plt.axes()

im=ax.scatter(data_res.lon,data_res.lat,s=4,c=data_res.resistivity,cmap="jet")

ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

ax.plot(comm.LONGITUDE, comm.LATITUDE, marker='x', linestyle='',markersize=5, color='y',label=commname+" Deposits")

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

plt.legend(loc=3)

cbaxes = fig.add_axes([0.40, 0.18, 0.2, 0.015])

cbar = plt.colorbar(im, cax = cbaxes,orientation="horizontal",extend='both')

cbar.set_label('Resistivity $\Omega$.m', labelpad=10)

cbar.ax.xaxis.set_label_position('top')

plt.show()

png

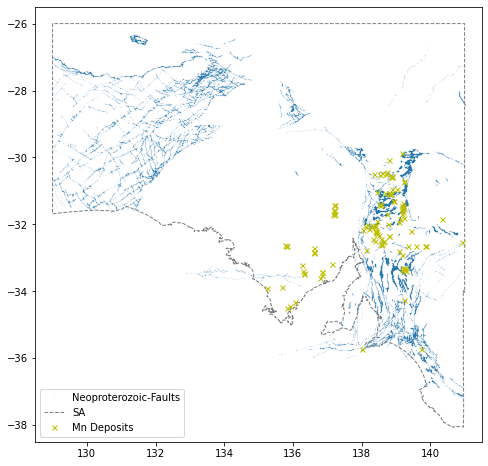

Faults and dykes vector polylines

# For dealing with arrays

import numpy as np#Get fault data neo

faultshape="data/Faults/Faults.shp"

shapeRead = shapefile.Reader(faultshape)

shapes = shapeRead.shapes()

Nshp = len(shapes)

faultsNeo=[]

for i in range(0,Nshp):

for j in shapes[i].points:

faultsNeo.append([j[0],j[1]])

faultsNeo=np.array(faultsNeo)

faultsNeoarray([[133.46269605, -27.41825034],

[133.46770683, -27.42062991],

[133.4723624 , -27.42259841],

...,

[138.44613353, -35.36560605],

[138.44160669, -35.36672662],

[138.43805501, -35.36793484]])This data is just a Lat-Lon location. Think how we can use this in a model.

fig = plt.figure(figsize=(8,8))

ax = plt.axes()

plt.plot(faultsNeo[:,0],faultsNeo[:,1],'.',markersize=0.1,label="Neoproterozoic-Faults")

ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

ax.plot(comm.LONGITUDE, comm.LATITUDE, marker='x', linestyle='',markersize=5, color='y',label=commname+" Deposits")

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

plt.legend(loc=3)

plt.show()

png

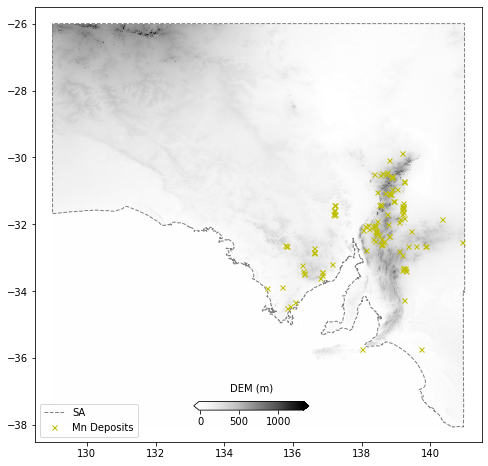

Netcdf formatted raster grids - geophysics

# For timing events

import time

# For making grids and reading netcdf data

import scipy

import scipy.io#Define a function to read the netcdf files

def readnc(filename):

tic=time.time()

rasterfile=filename

data = scipy.io.netcdf_file(rasterfile,'r',mmap=False)

xdata=data.variables['lon'][:]

ydata=data.variables['lat'][:]

zdata=np.array(data.variables['Band1'][:])

data.close()

toc=time.time()

print("Loaded", rasterfile, "in", f'{toc-tic:.2f}s')

print("Spacing x", f'{xdata[2]-xdata[1]:.2f}',

"y", f'{ydata[2]-ydata[1]:.2f}',

"Shape:", np.shape(zdata), "Min x:", np.min(xdata), "Max x:", np.max(xdata),

"Min y:", np.min(ydata), f'Max y {np.max(ydata):.2f}')

return(xdata,ydata,zdata,np.min(xdata),np.min(ydata),xdata[2]-xdata[1],ydata[2]-ydata[1])# Digital Elevation Model

x1,y1,z1,originx1,originy1,pixelx1,pixely1 = readnc("data/sa-dem.nc")

# Total Magnetic Intensity

x2,y2,z2,originx2,originy2,pixelx2,pixely2 = readnc("data/sa-mag-tmi.nc")

# Gravity

x3,y3,z3,originx3,originy3,pixelx3,pixely3 = readnc("data/sa-grav.nc")Loaded data/sa-dem.nc in 0.01s

Spacing x 0.01 y 0.01 Shape: (1208, 1201) Min x: 129.005 Max x: 141.005 Min y: -38.065 Max y -25.99

Loaded data/sa-mag-tmi.nc in 0.00s

Spacing x 0.01 y 0.01 Shape: (1208, 1201) Min x: 129.005 Max x: 141.005 Min y: -38.065 Max y -25.99

Loaded data/sa-grav.nc in 0.01s

Spacing x 0.01 y 0.01 Shape: (1208, 1201) Min x: 129.005 Max x: 141.005 Min y: -38.065 Max y -25.99fig = plt.figure(figsize=(8,8))

ax = plt.axes()

im=plt.pcolormesh(x1,y1,z1,cmap='Greys',shading='auto')

ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

ax.plot(comm.LONGITUDE, comm.LATITUDE, marker='x', linestyle='',markersize=5, color='y',label=commname+" Deposits")

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

plt.legend(loc=3)

cbaxes = fig.add_axes([0.40, 0.18, 0.2, 0.015])

cbar = plt.colorbar(im, cax = cbaxes,orientation="horizontal",extend='both')

cbar.set_label('DEM (m)', labelpad=10)

cbar.ax.xaxis.set_label_position('top')

plt.show()

png

These data are raster grids. Essentially Lat-Lon-Value like the XYZ data, but represented in a different format.

Categorical Geology in vector polygons

#Archean basement geology

geolshape=shapefile.Reader("data/Archaean_Early_Mesoprterzoic_polygons_shp/geology_archaean.shp")

recsArch = geolshape.records()

shapesArch = geolshape.shapes()# Print the field names in the shapefile

for i,field in enumerate(geolshape.fields):

print(i-1,field[0]) -1 DeletionFlag

0 MAJORSTRAT

1 SG_DESCRIP

2 MAPUNIT

3 SG_PROVINC

4 DOMAIN

5 AGE

6 SEQUSET

7 PRIMARYAGE

8 OROGENYAGE

9 INHERITAGE

10 STRATNO

11 STRATNAME

12 STRATDESC

13 GISCODE

14 SUBDIVNAME

15 SUBDIVSYMB

16 PROVINCE

17 MAXAGE

18 MAXMOD

19 MAXMETH

20 MINAGE

21 MINMOD

22 MINMETH

23 GLCODEfig = plt.figure(figsize=(8,8))

ax = plt.axes()

#index of the geology unit #4 #10 #12

geoindex = 4

#Gather all the unique Major Geology unit numbers

labs=[]

for i in recsArch:

labs.append(i[geoindex])

geols = list(set(labs))

# Create a unique color for each geological unit label

color = plt.cm.tab20(np.linspace(0, 1, len(geols)))

cdict={}

for i, geol in enumerate(geols):

cdict.update({geol:color[i]})

#Plot each of the geology polygons

legend1=[]

for i in range(len(shapesArch)):

boundary = shapesArch[i].points

xs = [x for x, y in shapesArch[i].points]

ys = [y for x, y in shapesArch[i].points]

c = cdict[recsArch[i][geoindex]]

l1 = ax.fill(xs,ys,c=c,label=recsArch[i][geoindex])

legend1.append(l1)

#Plot the extra stuff

l2 = ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

l3 = ax.plot(comm.LONGITUDE, comm.LATITUDE,

marker='s', markeredgecolor='k', linestyle='',markersize=4, color='y',

label=commname+" Deposits")

#Todo: Split the legends

#ax.legend([l2,l3],['SA',commname+" Deposits"],loc=3)

#Legend without duplicate values

handles, labels = ax.get_legend_handles_labels()

unique = [(h, l) for i, (h, l) in enumerate(zip(handles, labels)) if l not in labels[:i]]

ax.legend(*zip(*unique), bbox_to_anchor = (1.02, 1.01), ncol=3)

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

#plt.legend(loc=3) #bbox_to_anchor = (1.05, 0.6))

plt.show()

png

Take a moment to appreciate the various methods you have used just to load the data!

Now we need to think about what we actually want to achieve? What is our goal here? This will determine what kind of data analysis/manipulation we need to make here. Consider the flow diagram for choosing the right machine learning method.

Step 3 - Assign geophys values to target locations

We need to assign the values of each of these geophyiscal datasets (predictor variables) to the target class (i.e. mineral deposit locations). The assumption being that the occurnece of some mineral deposit (e.g. Cu) is a function of x1, x2, x3, x4, x5, x6. Where the Resitivity is x1, the distance to a Neoprotezoic fault is x2, the value of DEM, magnetic TMI, and Gravity is x3, x4, and x5, and the geologica basement unit is x6.

# Make a Target DataFrame of the points we want to interrogate the features for

td1 = comm[['LONGITUDE', 'LATITUDE']].copy()Resistivity

# For making KD Trees

import scipy.spatial# Define a function which "coregisters" a point from a bunch of other points.

def coregPoint(tree,point,region,retval='index'):

'''

Finds the nearest neighbour to a point from a bunch of other points

tree - a scipy CKTree to search for the point over

point - array([longitude,latitude])

region - integer, same units as data

'''

dists, indexes = tree.query(point,k=1,distance_upper_bound=region)

if retval=='index':

return (indexes)

elif retval=='dists':

return(dists)

# Find the values of the resetivity grid for each lat/lon deposit location.

# Make a search-tree of the point-pairs for fast lookup of nearest matches

treeres = scipy.spatial.cKDTree(np.c_[data_res.lon,data_res.lat])

# Perform the search for each point

indexes = td1.apply(

lambda x: coregPoint(treeres,np.array([x.LONGITUDE, x.LATITUDE]),1,retval='index'), axis=1)td1['res'] = data_res.loc[indexes].resistivity.values

td1| LONGITUDE | LATITUDE | res | |

|---|---|---|---|

| 0 | 139.179436 | -29.877637 | 2.2135 |

| 1 | 138.808767 | -30.086296 | 2.3643 |

| 2 | 138.752281 | -30.445684 | 2.1141 |

| 3 | 138.530506 | -30.533225 | 2.2234 |

| 4 | 138.887019 | -30.565479 | 2.1982 |

| … | … | … | … |

| 110 | 136.059715 | -34.327929 | 3.4926 |

| 111 | 138.016821 | -35.733084 | 2.0868 |

| 112 | 139.250036 | -34.250155 | 1.9811 |

| 113 | 135.905480 | -34.425866 | 2.7108 |

| 114 | 135.835578 | -34.509779 | 3.1224 |

115 rows × 3 columns

Faults

#Same for the fault data

# but this time we get the "distance to the point", rather than the value at that point.

treefaults = scipy.spatial.cKDTree(faultsNeo)

dists = td1.apply(

lambda x: coregPoint(treefaults,np.array([x.LONGITUDE, x.LATITUDE]),100,retval='dists'), axis=1)td1['faults'] = dists

td1| LONGITUDE | LATITUDE | res | faults | |

|---|---|---|---|---|

| 0 | 139.179436 | -29.877637 | 2.2135 | 0.010691 |

| 1 | 138.808767 | -30.086296 | 2.3643 | 0.103741 |

| 2 | 138.752281 | -30.445684 | 2.1141 | 0.006659 |

| 3 | 138.530506 | -30.533225 | 2.2234 | 0.013925 |

| 4 | 138.887019 | -30.565479 | 2.1982 | 0.007356 |

| … | … | … | … | … |

| 110 | 136.059715 | -34.327929 | 3.4926 | 0.526835 |

| 111 | 138.016821 | -35.733084 | 2.0868 | 0.002451 |

| 112 | 139.250036 | -34.250155 | 1.9811 | 0.027837 |

| 113 | 135.905480 | -34.425866 | 2.7108 | 0.670323 |

| 114 | 135.835578 | -34.509779 | 3.1224 | 0.776152 |

115 rows × 4 columns

Geophysics

# Define a function which "coregisters" a point within a raster.

def get_coords_at_point(originx,originy,pixelx,pixely,lon,lat):

'''

Given a point in some coordinate reference (e.g. lat/lon)

Find the closest point to that in an array (e.g. a raster)

and return the index location of that point in the raster.

INPUTS

"output from "gdal_data.GetGeoTransform()"

originx: first point in first axis

originy: first point in second axis

pixelx: difference between x points

pixely: difference between y points

lon: x/row-coordinate of interest

lat: y/column-coordinate of interest

RETURNS

col: x index value from the raster

row: y index value from the raster

'''

row = int((lon - originx)/pixelx)

col = int((lat - originy)/pixely)

return (col, row)

# Pass entire array of latlon and raster info to us in get_coords_at_point

def rastersearch(latlon,raster,originx,originy,pixelx,pixely):

zlist=[]

for lon,lat in zip(latlon.LONGITUDE,latlon.LATITUDE):

try:

zlist.append(raster[get_coords_at_point(originx,originy,pixelx,pixely,lon,lat)])

except:

zlist.append(np.nan)

return(zlist)td1['dem'] = rastersearch(td1,z1,originx1,originy1,pixelx1,pixely1)

td1['mag'] = rastersearch(td1,z2,originx2,originy2,pixelx2,pixely2)

td1['grav'] = rastersearch(td1,z3,originx3,originy3,pixelx3,pixely3)td1| LONGITUDE | LATITUDE | res | faults | dem | mag | grav | |

|---|---|---|---|---|---|---|---|

| 0 | 139.179436 | -29.877637 | 2.2135 | 0.010691 | 187.297424 | -118.074890 | 1.852599 |

| 1 | 138.808767 | -30.086296 | 2.3643 | 0.103741 | 179.499237 | -209.410507 | -12.722121 |

| 2 | 138.752281 | -30.445684 | 2.1141 | 0.006659 | 398.336823 | -159.566422 | -6.249788 |

| 3 | 138.530506 | -30.533225 | 2.2234 | 0.013925 | 335.983429 | -131.176437 | -11.665316 |

| 4 | 138.887019 | -30.565479 | 2.1982 | 0.007356 | 554.278198 | -192.363297 | -1.025702 |

| … | … | … | … | … | … | … | … |

| 110 | 136.059715 | -34.327929 | 3.4926 | 0.526835 | 45.866119 | -244.067841 | 11.410070 |

| 111 | 138.016821 | -35.733084 | 2.0868 | 0.002451 | 145.452789 | -203.566940 | 18.458364 |

| 112 | 139.250036 | -34.250155 | 1.9811 | 0.027837 | 276.489319 | -172.889587 | -1.714886 |

| 113 | 135.905480 | -34.425866 | 2.7108 | 0.670323 | 162.431747 | 569.713684 | 15.066316 |

| 114 | 135.835578 | -34.509779 | 3.1224 | 0.776152 | 89.274399 | 64.385925 | 24.267015 |

115 rows × 7 columns

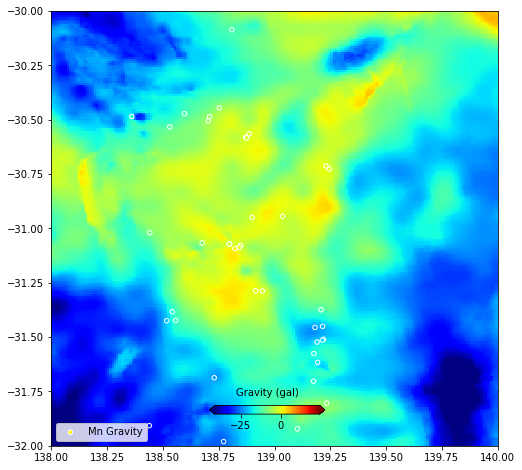

# Check we got it right.

# Plot a grid, and our interrogated points

fig = plt.figure(figsize=(8,8))

ax = plt.axes()

im=plt.pcolormesh(x3,y3,z3,cmap='jet',shading='auto',vmin=min(td1.grav),vmax=max(td1.grav))

#ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

#ax.plot(comm.LONGITUDE, comm.LATITUDE, marker='o', linestyle='',markersize=5, color='y',label=commname+" Deposits")

ax.scatter(td1.LONGITUDE, td1.LATITUDE, s=20, c=td1.grav,

label=commname+" Gravity",cmap='jet',vmin=min(td1.grav),vmax=max(td1.grav),edgecolors='white')

plt.xlim(138,140)

plt.ylim(-32,-30)

plt.legend(loc=3)

cbaxes = fig.add_axes([0.40, 0.18, 0.2, 0.015])

cbar = plt.colorbar(im, cax = cbaxes,orientation="horizontal",extend='both')

cbar.set_label('Gravity (gal)', labelpad=10)

cbar.ax.xaxis.set_label_position('top')

plt.show()

png

Geology

# For dealing with shapefile components

from shapely.geometry import Point

from shapely.geometry import shape

#Define a function to find what polygon a point lives inside (speed imporivements can be made here)

def shapeExplore(lon,lat,shapes,recs,record):

#'record' is the column index you want returned

for i in range(len(shapes)):

boundary = shapes[i]

if Point((lon,lat)).within(shape(boundary)):

return(recs[i][record])

#if you have been through the loop with no result

return('-9999')%%time

geoindex = 4

td1['geol']=td1.apply(lambda x: shapeExplore(x.LONGITUDE, x.LATITUDE, shapesArch,recsArch,geoindex), axis=1)CPU times: user 9.63 s, sys: 11.8 ms, total: 9.64 s

Wall time: 9.65 std1| LONGITUDE | LATITUDE | res | faults | dem | mag | grav | geol | |

|---|---|---|---|---|---|---|---|---|

| 0 | 139.179436 | -29.877637 | 2.2135 | 0.010691 | 187.297424 | -118.074890 | 1.852599 | Crustal element Muloorina |

| 1 | 138.808767 | -30.086296 | 2.3643 | 0.103741 | 179.499237 | -209.410507 | -12.722121 | Crustal element Adelaide |

| 2 | 138.752281 | -30.445684 | 2.1141 | 0.006659 | 398.336823 | -159.566422 | -6.249788 | Crustal element Adelaide |

| 3 | 138.530506 | -30.533225 | 2.2234 | 0.013925 | 335.983429 | -131.176437 | -11.665316 | Crustal element Adelaide |

| 4 | 138.887019 | -30.565479 | 2.1982 | 0.007356 | 554.278198 | -192.363297 | -1.025702 | Crustal element Adelaide |

| … | … | … | … | … | … | … | … | … |

| 110 | 136.059715 | -34.327929 | 3.4926 | 0.526835 | 45.866119 | -244.067841 | 11.410070 | Cleve, Spencer, Olympic Domains |

| 111 | 138.016821 | -35.733084 | 2.0868 | 0.002451 | 145.452789 | -203.566940 | 18.458364 | Crustal element Kanmantoo SW |

| 112 | 139.250036 | -34.250155 | 1.9811 | 0.027837 | 276.489319 | -172.889587 | -1.714886 | Crustal element Kanmantoo Main |

| 113 | 135.905480 | -34.425866 | 2.7108 | 0.670323 | 162.431747 | 569.713684 | 15.066316 | Cleve Domain |

| 114 | 135.835578 | -34.509779 | 3.1224 | 0.776152 | 89.274399 | 64.385925 | 24.267015 | Cleve Domain |

115 rows × 8 columns

Congrats, you now have an ML dataset ready to go!

Almost… but what is the target? Let’s make a binary classifier.

Step 4 - Generate a “non-deposit” dataset

We have a set of locations where a certain mineral deposit occurs along with the values of various geophysical parameters at those locations. To identify what values of the geophysics are associated with a mineral deposit then we need a representation of the “background noise” of those parameters, i.e. what the values are when there is no mineral deposit.

This step is important. There are numerous ways to generate our non-deposit set, each with different benefits and trade-offs. The randomisation of points throughout some domain appears to be robust. But you must think, is this domain a reasonable estimation of “background” geophysics/geology? Why are you picking these locations as non-deposits? Will they be over/under-representing actual deposits? Will they be over/under-representing actual non-deposits?

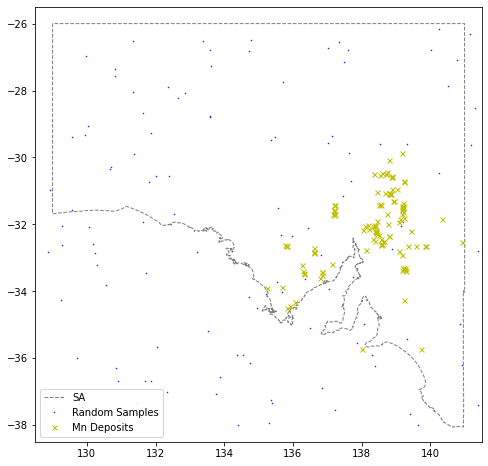

#Now make a set of "non-deposits" using a random location within our exploration area

lats_rand=np.random.uniform(low=min(df.LATITUDE), high=max(df.LATITUDE), size=len(comm.LATITUDE))

lons_rand=np.random.uniform(low=min(df.LONGITUDE), high=max(df.LONGITUDE), size=len(comm.LONGITUDE))

print("Produced", len(lats_rand),len(lons_rand), "latitude-longitude pairs for non-deposits.")Produced 115 115 latitude-longitude pairs for non-deposits.# Where are these randomised "non deposits"

fig = plt.figure(figsize=(8,8))

ax = plt.axes()

ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

ax.plot(lons_rand, lats_rand,

marker='.', linestyle='',markersize=1, color='b',label="Random Samples")

ax.plot(td1.LONGITUDE, td1.LATITUDE,

marker='x', linestyle='',markersize=5, color='y',label=commname+" Deposits")

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

plt.legend(loc=3)

plt.show()

png

We must do the same coregistration/interrogation of the different data layers for our randomised “non-deposit” data.

%%time

td2 = pd.DataFrame({'LONGITUDE': lons_rand, 'LATITUDE': lats_rand})

# Res

indexes = td2.apply(

lambda x: coregPoint(treeres,np.array([x.LONGITUDE, x.LATITUDE]),10,retval='index'), axis=1)

td2['res'] = data_res.loc[indexes].resistivity.values

# Faults

td2['faults'] = td2.apply(

lambda x: coregPoint(treefaults,np.array([x.LONGITUDE, x.LATITUDE]),100,retval='dists'), axis=1)

# Geophys

td2['dem'] = rastersearch(td2,z1,originx1,originy1,pixelx1,pixely1)

td2['mag'] = rastersearch(td2,z2,originx2,originy2,pixelx2,pixely2)

td2['grav'] = rastersearch(td2,z3,originx3,originy3,pixelx3,pixely3)

#Geology

td2['geol']=td2.apply(lambda x: shapeExplore(x.LONGITUDE, x.LATITUDE, shapesArch,recsArch,geoindex), axis=1)CPU times: user 22.7 s, sys: 23.8 ms, total: 22.8 s

Wall time: 22.8 s#Add flag indicating classification label

td1['deposit']=1

td2['deposit']=0fv = pd.concat([td1,td2],axis=0,ignore_index=True)

fv| LONGITUDE | LATITUDE | res | faults | … | mag | grav | geol | deposit | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 139.179436 | -29.877637 | 2.2135 | 0.010691 | … | -118.074890 | 1.852599 | Crustal element Muloorina | 1 |

| 1 | 138.808767 | -30.086296 | 2.3643 | 0.103741 | … | -209.410507 | -12.722121 | Crustal element Adelaide | 1 |

| 2 | 138.752281 | -30.445684 | 2.1141 | 0.006659 | … | -159.566422 | -6.249788 | Crustal element Adelaide | 1 |

| 3 | 138.530506 | -30.533225 | 2.2234 | 0.013925 | … | -131.176437 | -11.665316 | Crustal element Adelaide | 1 |

| 4 | 138.887019 | -30.565479 | 2.1982 | 0.007356 | … | -192.363297 | -1.025702 | Crustal element Adelaide | 1 |

| … | … | … | … | … | … | … | … | … | … |

| 225 | 141.316422 | -28.535431 | 1.9873 | 0.318785 | … | NaN | NaN | -9999 | 0 |

| 226 | 135.352957 | -37.255236 | -0.5148 | 1.402739 | … | 0.000000 | 0.000000 | -9999 | 0 |

| 227 | 140.865975 | -34.989399 | 2.0438 | 0.945575 | … | -63.256947 | -20.687813 | Crustal element Glenelg | 0 |

| 228 | 130.191418 | -32.579327 | -0.7268 | 0.992523 | … | 0.000000 | 0.000000 | ?Fowler Domain | 0 |

| 229 | 137.454301 | -31.160760 | 1.9846 | 0.585865 | … | 21.408169 | -20.863804 | Cleve, Spencer, Olympic Domains | 0 |

230 rows × 9 columns

# Save all our hard work to a csv file for more hacking to come!

fv.to_csv('data/fv.csv',index=False)Exploratory Data Analysis

This is often the point you recieve the data in (if you are using any well-formed datasets). But in reality 90% of the time is doing weird data wrangling steps like what we have done. Then 9% is spent exploring your dataset and understanding it more, dealing with missing data, observing correlations. This is often an iterative process. Let’s do some simple visualisations.

Note: the last 1% of time is actually applying the ML algorithms!

#Get information about index type and column types, non-null values and memory usage.

fv.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 230 entries, 0 to 229

Data columns (total 9 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 LONGITUDE 230 non-null float64

1 LATITUDE 230 non-null float64

2 res 230 non-null float64

3 faults 230 non-null float64

4 dem 225 non-null float64

5 mag 225 non-null float64

6 grav 225 non-null float64

7 geol 230 non-null object

8 deposit 230 non-null int64

dtypes: float64(7), int64(1), object(1)

memory usage: 16.3+ KB# For nice easy data vis plots

import seaborn as snsmissingNo = fv.isnull().sum(axis = 0).sort_values(ascending = False)

missingNo = missingNo[missingNo.values > 0]

missingNo

sns.barplot(missingNo.values, missingNo.index);/home/ubuntu/miniconda3/lib/python3.9/site-packages/seaborn/_decorators.py:36: FutureWarning: Pass the following variables as keyword args: x, y. From version 0.12, the only valid positional argument will be `data`, and passing other arguments without an explicit keyword will result in an error or misinterpretation.

warnings.warn(

png

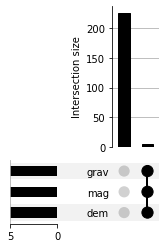

import upsetplotmissing_cols = missingNo.index[:5].tolist()

missing_counts = (fv.loc[:, missing_cols]

.isnull()

.groupby(missing_cols)

.size())

upsetplot.plot(missing_counts);

png

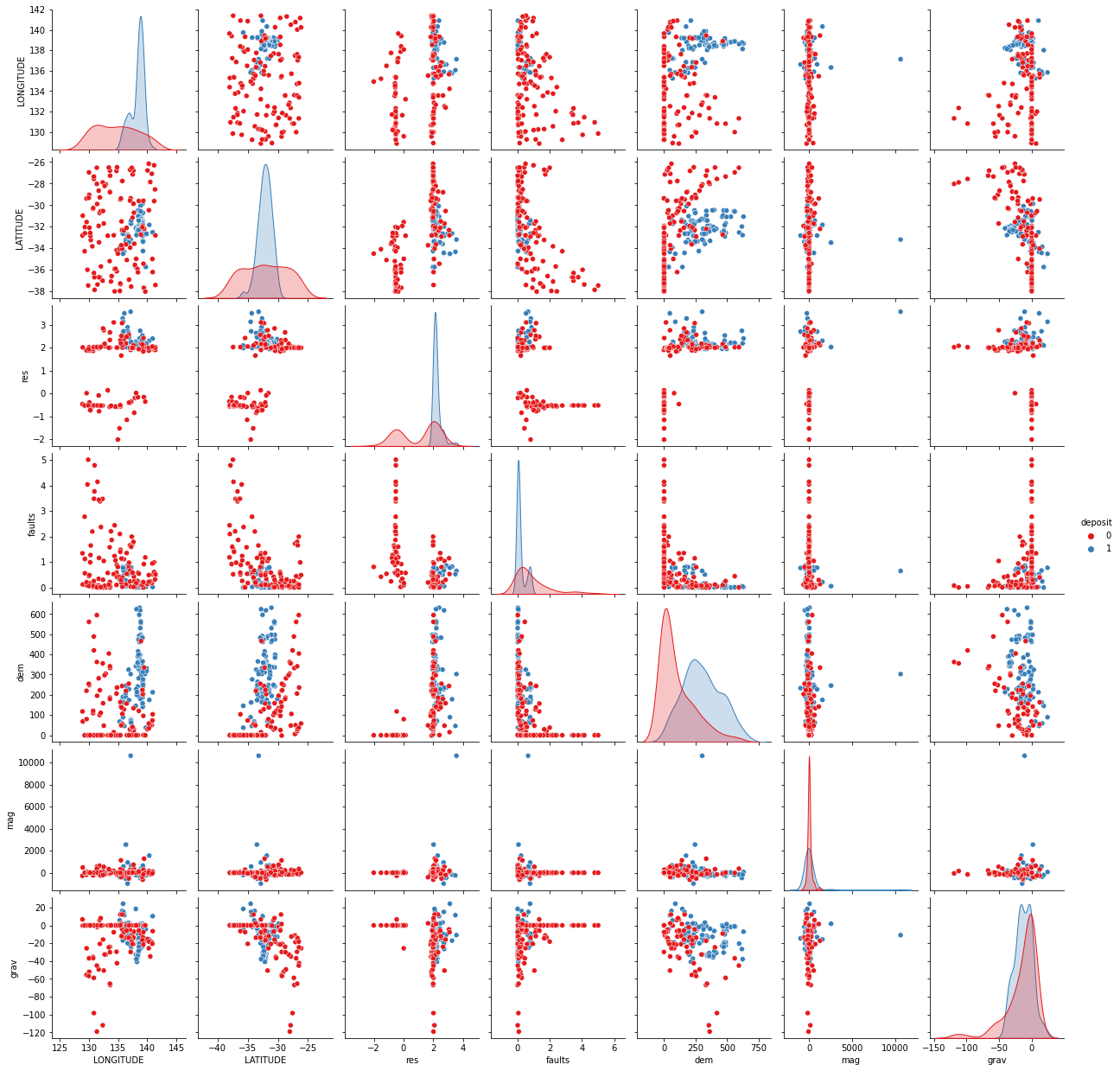

# Plot historgrams and scatter plots for each combination of features.

sns.pairplot(fv,hue='deposit',palette="Set1",diag_kind="auto")<seaborn.axisgrid.PairGrid at 0x7f325381e190>

png

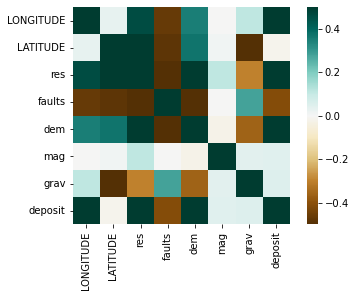

#Plot a heatmap for how correlated each of the features are

corr = fv.corr()

sns.heatmap(corr,

cmap=plt.cm.BrBG,

vmin=-0.5, vmax=0.5,

square=True,

xticklabels=True, yticklabels=True,

);

png

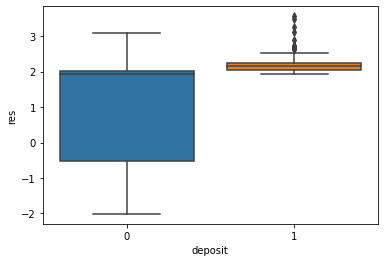

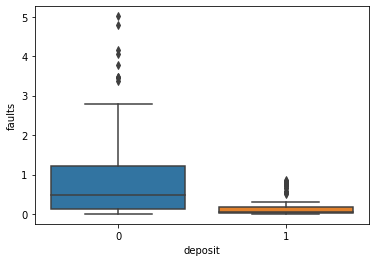

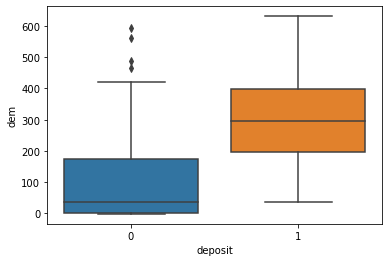

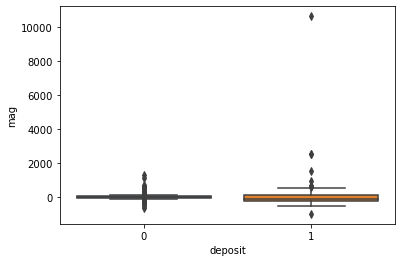

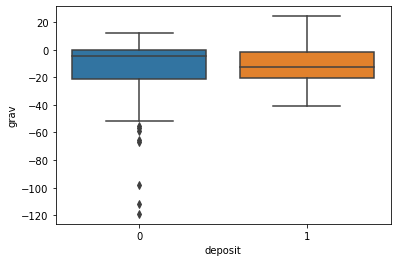

for i in ['res', 'faults', 'dem', 'mag', 'grav']:

ax = sns.boxplot(x='deposit',y=i, data=fv)

plt.show()

png

png

png

png

png

Machine Learning

We now have a clean dataset, we know a bit about, let’s try and measure some inferences.

ML Classification

This is where the ML classifier is defined. We can substitue our favourite ML technique here, and tune model variables as desired. As always the scikit-learn documentation is a great starting point to learn how these algorithms work.

from sklearn.pipeline import Pipeline

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import OneHotEncoder

from sklearn.compose import ColumnTransformer

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

from sklearn.neural_network import MLPClassifier#Create the 'feature vector' and a 'target classification vector'

features=fv.dropna().iloc[:,2:-1]

targets=fv.dropna().deposit

features.columnsIndex(['res', 'faults', 'dem', 'mag', 'grav', 'geol'], dtype='object')numfts = ['res', 'faults', 'dem', 'mag', 'grav']

catfts = ['geol']for i in features.geol:

if not isinstance(i, str):

print(i)#Create the ML classifier with numerical and categorical data

#Scale, and replace missing values

numeric_transformer = Pipeline(steps=[

('imputer',SimpleImputer(missing_values=-9999., strategy='median')),

('scaler', StandardScaler())])

#Encode categorical data and fill missing values with default 0

categorical_transformer = Pipeline(steps=[

('imputer', SimpleImputer(strategy='constant')),

('onehot', OneHotEncoder(handle_unknown='ignore'))])

#Combine numerical and categorical data

preprocessor = ColumnTransformer(transformers=[

('num', numeric_transformer, numfts),

('cat', categorical_transformer, catfts)])

# Append classifier to preprocessing pipeline.

# Now we have a full prediction pipeline.

rf = Pipeline(steps=[('preprocessor', preprocessor),

('classifier', RandomForestClassifier(random_state=1))])

#('classifier', MLPClassifier(solver='adam', alpha=0.001, max_iter=10000))])

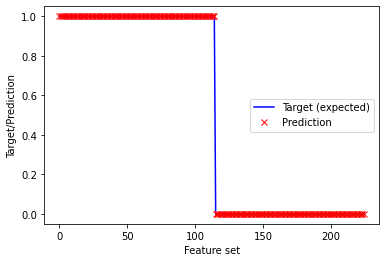

print('Tranining the Clasifier...')

rf.fit(features,targets)

print("Done RF. Now scoring...")

scores = cross_val_score(rf, features,targets, cv=5)

print("RF 5-fold cross validation Scores:", scores)

print("SCORE Mean: %.2f" % np.mean(scores), "STD: %.2f" % np.std(scores), "\n")

plt.plot(targets.values,'b-',label='Target (expected)')

plt.plot(rf.predict(features),'rx',label='Prediction')

plt.xlabel("Feature set")

plt.ylabel("Target/Prediction")

plt.legend(loc=7)Tranining the Clasifier...

Done RF. Now scoring...

RF 5-fold cross validation Scores: [0.93333333 0.97777778 0.91111111 0.88888889 0.73333333]

SCORE Mean: 0.89 STD: 0.08

<matplotlib.legend.Legend at 0x7f3251577a60>

png

print("Features:",np.shape(features),"Targets:",np.shape(targets))

rf.fit(features,targets)

scores = cross_val_score(rf, features,targets, cv=5)

print("RF CV-Scores: ",scores)

print("CV-SCORE Mean: %.2f" % np.mean(scores), "STD: %.2f" % np.std(scores))

#print("OOB score:",rf.steps[-1][1].oob_score_)

print("Targets (expected result):")

print(targets.values)

print("Prediction (actual result):")

print(rf.predict(features))Features: (225, 6) Targets: (225,)

RF CV-Scores: [0.93333333 0.97777778 0.91111111 0.88888889 0.73333333]

CV-SCORE Mean: 0.89 STD: 0.08

Targets (expected result):

[1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0]

Prediction (actual result):

[1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

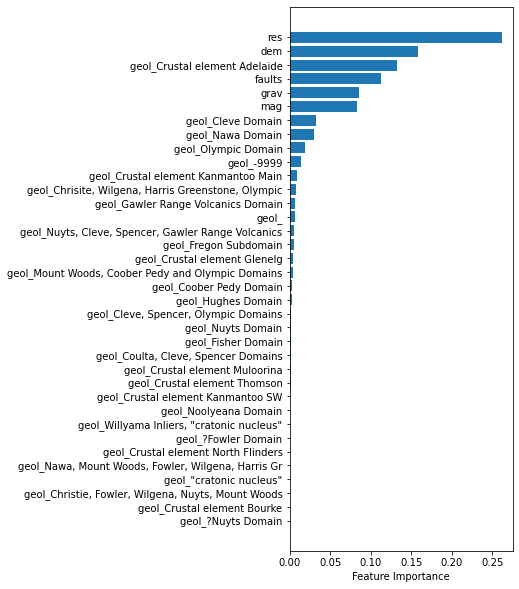

0 0 0]# Gather the importance measures

ft_imp=[]

ft_lab=[]

for i,lab in enumerate(np.append(numfts,rf['preprocessor'].transformers_[1][1]['onehot'].get_feature_names(catfts))):

ft_imp.append(rf.steps[-1][1].feature_importances_[i])

ft_lab.append(lab)#Make the bar plot

ft_imps, ft_labs = (list(t) for t in zip(*sorted(zip(ft_imp,ft_lab))))

datalength=len(ft_imp)

#Create a new figure

fig,ax = plt.subplots(figsize=(4,10))

#Plot the bar graph

rects=ax.barh(np.arange(0, datalength, step=1),ft_imps)

ax.set_yticks(np.arange(0, datalength, step=1))

ax.set_yticklabels(ft_labs)

ax.set_xlabel('Feature Importance')

print("From the Random Forest ML algorithm,")

print("these are the the most significant features for predicting the target bins\n")

plt.show()From the Random Forest ML algorithm,

these are the the most significant features for predicting the target bins

png

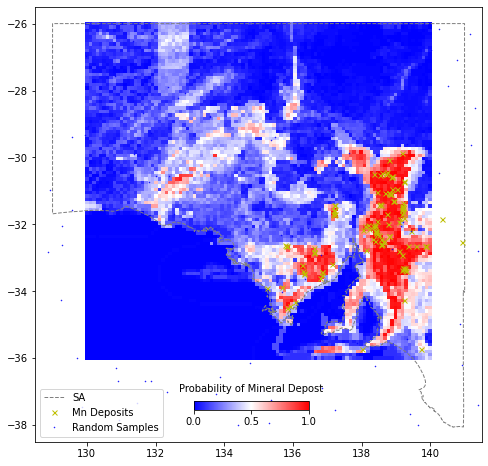

Find where to dig!

Now we have a trained model we can pass it NEW data, that is values for all the geopysical parameters, and it will give us a prediction for whether there is a deposit there or not. Simple.

res1= [2.2,2.2]

faults1 = [0.01,2.2]

dem1 = [187,2.2]

mag1= [-118,2.2]

grav = [1.8,2.2]

geol = ['Crustal element Muloorina','Crustal element Muloorina']

targets = pd.DataFrame({'res':res1,'faults':faults1,'dem':dem1,'mag':mag1,'grav':grav,'geol':geol})

print(targets)

#print(targets.reshape(1, -11))

rf.predict_proba(targets) res faults dem mag grav geol

0 2.2 0.01 187.0 -118.0 1.8 Crustal element Muloorina

1 2.2 2.20 2.2 2.2 2.2 Crustal element Muloorina

array([[0.13, 0.87],

[0.79, 0.21]])#100x100 takes about 1 hour! 10x10 takes about 1 minute

# Make a grid of target locations

grid_x, grid_y = np.mgrid[130:140:100j,-36:-26:100j]%%time

tdf = pd.DataFrame({'LONGITUDE': grid_x.reshape(grid_x.size), 'LATITUDE': grid_y.reshape(grid_y.size)})

# Res

indexes = tdf.apply(

lambda x: coregPoint(treeres,np.array([x.LONGITUDE, x.LATITUDE]),10,retval='index'), axis=1)

tdf['res'] = data_res.loc[indexes].resistivity.values

print("Done res")

# Faults

tdf['faults'] = tdf.apply(

lambda x: coregPoint(treefaults,np.array([x.LONGITUDE, x.LATITUDE]),100,retval='dists'), axis=1)

print("Done faults")

# Geophys

tdf['dem'] = rastersearch(tdf,z1,originx1,originy1,pixelx1,pixely1)

tdf['mag'] = rastersearch(tdf,z2,originx2,originy2,pixelx2,pixely2)

tdf['grav'] = rastersearch(tdf,z3,originx3,originy3,pixelx3,pixely3)

print("Done rasters")

# Geology

tdf['geol']=tdf.apply(lambda x: shapeExplore(x.LONGITUDE, x.LATITUDE, shapesArch,recsArch,geoindex), axis=1)

print("Done!")Done res

Done faults

Done rasters

Done!

CPU times: user 24min 55s, sys: 1.32 s, total: 24min 56s

Wall time: 24min 57s# Save all our hard work to a csv file for more hacking to come!

tdf.to_csv('data/tdf_100.csv',index=False)#Apply the trained ML to our gridded data to determine the probabilities at each of the points

print('RF...')

pRF_map=np.array(rf.predict_proba(tdf.iloc[:,2:]))

print("Done RF")RF...

Done RF#X, Y = np.meshgrid(xi, yi)

gridZ = scipy.interpolate.griddata((tdf.LONGITUDE, tdf.LATITUDE), pRF_map[:,1], (grid_x, grid_y),method='linear')fig = plt.figure(figsize=(8,8))

ax = plt.axes()

im=plt.pcolormesh(grid_x,grid_y,gridZ,cmap='bwr',shading='auto')

ax.plot(xval,yval,'grey',linestyle='--',linewidth=1,label='SA')

ax.plot(fv[fv.deposit==1].LONGITUDE, fv[fv.deposit==1].LATITUDE,

marker='x', linestyle='',markersize=5, color='y',label=commname+" Deposits")

ax.plot(fv[fv.deposit==0].LONGITUDE, fv[fv.deposit==0].LATITUDE,

marker='.', linestyle='',markersize=1, color='b',label="Random Samples")

plt.xlim(128.5,141.5)

plt.ylim(-38.5,-25.5)

plt.legend(loc=3)

cbaxes = fig.add_axes([0.40, 0.18, 0.2, 0.015])

cbar = plt.colorbar(im, cax = cbaxes,orientation="horizontal")

cbar.set_label('Probability of Mineral Depost', labelpad=10)

cbar.ax.xaxis.set_label_position('top')

plt.show()

png